- A study found Tesla drivers are distracted while using Autopilot

- Tesla’s Autopilot is a hands-on driver-assist system, not a hands-off system

- The study notes more robust safeguards are needed to prevent misuse

Driver-assist systems like Tesla Autopilot are meant to reduce the frequency of crashes, but drivers are more likely to become distracted as they get used to them, according to a new study published Tuesday by the Insurance Institute for Highway Safety (IIHS).

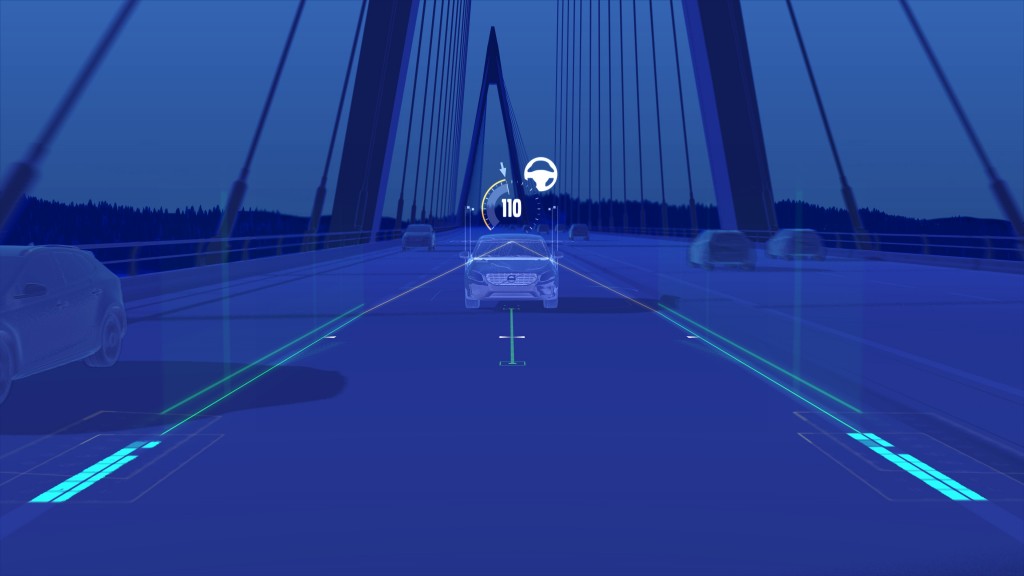

Autopilot and Volvo’s Pilot Assist system were examined in two separate studies by the IIHS and the Massachusetts Institute of Technology’s AgeLab. Both studies showed that drivers tended to engage in distracting behaviors while still meeting the bare-minimum attention requirements of these systems, which the IIHS refers to as “partial automation” systems.

In one study, researchers analyzed how the driving behavior of 29 volunteers provided with a Pilot Assist-equipped 2017 Volvo S90 changed over four weeks. Researchers focused on how likely volunteers were to engage in non-driving behaviors when using Pilot Assist on highways, compared with unassisted highway driving.

Pilot Assist in 2017 Volvo S90

Drivers were more likely to “check their phones, eat a sandwich, or do other visual-manual activities” than when driving unassisted, the study found. That tendency generally increased over time as drivers got used to the systems, although both studies found that some drivers engaged in distracted driving from the outset.

The second study looked at the driving behavior of 14 volunteers driving a 2020 Tesla Model 3 equipped with Autopilot over the course of a month. For this study, researchers picked people who had never used Autopilot or an equivalent system, and focused on how often drivers triggered the system’s attention warnings.

Researchers found that the Autopilot novices “quickly mastered the timing interval of its attention reminder feature so that they could prevent warnings from escalating into more serious interventions” such as emergency slowdowns or lockouts from the system.

2024 Tesla Model 3

“In both these studies, drivers adapted their behavior to engage in distracting activities,” IIHS President David Harkey said in a statement. “This demonstrates why partial automation systems need more robust safeguards to prevent misuse.”

The IIHS declared earlier this year, based on a different data set, that assisted driving systems don't improve safety, and it has advocated for more in-car driver monitoring to prevent a net-negative effect on safety. In March 2024, it completed testing of 14 driver-assist systems across nine brands and found that most were too easy to misuse. Autopilot in particular was found to confuse drivers into thinking it was more capable than it really was.

Autopilot’s shortcomings have also drawn attention from U.S. safety regulators. In a 2023 recall, Tesla restricted the behavior of its Full Self-Driving Beta system, which regulators called “an unreasonable risk to motor vehicle safety.” Tesla continues to use the misleading label Full Self-Driving even though the system offers no such capability.