A robot artist creates artwork of the performers at the 2022 Glastonbury music festival, UK. Credit: Leon Neal/Getty

Since their public release less than two years ago, large language models (LLMs) such as those that underlie ChatGPT have unleashed exciting and provocative progress in machine intelligence. Some researchers and commentators have speculated that these tools could represent a decisive step towards machines that demonstrate 'artificial general intelligence' (the range of abilities associated with human intelligence), thereby fulfilling a 70-year quest in artificial-intelligence (AI) research1.

One milestone along that journey is the demonstration of machine common sense. To a human, common sense is 'obvious stuff' about people and everyday life. Humans know from experience that glass objects are fragile, or that it might be impolite to serve meat when a vegan friend visits. Someone is said to lack common sense when they make mistakes that most people ordinarily wouldn't make. On that score, the current generation of LLMs often falls short.

LLMs usually fare well on tests involving an element of memorization. For example, the GPT-4 model behind ChatGPT can reportedly pass licensing exams for US physicians and lawyers. Yet it and similar models are easily flummoxed by simple puzzles. For instance, when we asked ChatGPT, 'Riley was in pain. How would Riley feel afterwards?', its best answer from a multiple-choice list was 'aware', rather than 'painful'.

Today, multiple-choice questions such as this are widely used to measure machine common sense, mirroring the SAT, a test used for US college admissions. Yet such questions reflect little of the real world, including humans' intuitive understanding of physical laws to do with heat or gravity, and the context of social interactions. As a result, quantifying how close LLMs are to displaying human-like behaviour remains an unsolved problem.

Humans are good at dealing with uncertain and ambiguous situations. Often, people settle for satisfactory answers instead of spending a lot of cognitive capacity on finding the optimal solution: buying a cereal on a supermarket shelf that is good enough, for instance, instead of analysing every option. Humans can switch deftly between intuitive and deliberative modes of reasoning2, handle improbable scenarios as they arise3, and plan or strategize, as people do when they divert from a familiar route after encountering heavy traffic.

Will machines ever be capable of similar feats of cognition? And how will researchers know definitively whether AI systems are on the path to acquiring such abilities?

Answering these questions will require computer scientists to engage with disciplines such as developmental psychology and the philosophy of mind. A finer appreciation of the fundamentals of cognition is also needed to devise better metrics to assess the performance of LLMs. Currently, it is still unclear whether AI models are good at mimicking humans in some tasks or whether the benchmarking metrics themselves are bad. Here, we describe progress towards measuring machine common sense and suggest ways forward.

Steady progress

Research on machine common sense dates back to an influential 1956 workshop at Dartmouth College in Hanover, New Hampshire, that brought top AI researchers together1. Logic-based symbolic frameworks, which use letters or logical operators to describe the relationships between objects and concepts, were subsequently developed to structure common-sense knowledge about time, events and the physical world. For instance, a series of 'if this happens, then this follows' statements could be manually programmed into machines and then used to teach them a common-sense fact: that unsupported objects fall under gravity.

Such research established the vision of machine common sense as building computer programs that learn from their experience as effectively as humans do. More technically, the aim is to make a machine that "automatically deduces for itself a sufficiently wide class of immediate consequences of anything it is told and what it already knows"4, given a set of rules.
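To make the idea of chained 'if this happens, then this follows' rules concrete, here is a minimal forward-chaining sketch in Python: it keeps applying rules until no new fact appears, which is one simple reading of 'deducing immediate consequences' of what a program is told. The predicates (`unsupported`, `fragile`, `may_break`) and the function `forward_chain` are hypothetical illustrations; early symbolic systems used far richer logics than this.

```python
# Minimal forward-chaining sketch. The predicates and rules are hypothetical
# illustrations, not taken from any real common-sense knowledge base.
Fact = tuple[str, str]  # (predicate, subject), e.g. ("unsupported", "glass")
Rule = tuple[frozenset[str], str]  # (premise predicates, conclusion predicate)

RULES: list[Rule] = [
    (frozenset({"unsupported"}), "falls"),           # unsupported things fall
    (frozenset({"falls", "fragile"}), "may_break"),  # fragile things that fall may break
]


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Apply every rule to every subject until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        subjects = {subject for _, subject in derived}
        for premises, conclusion in rules:
            for subject in subjects:
                new_fact = (conclusion, subject)
                if new_fact not in derived and all(
                    (p, subject) in derived for p in premises
                ):
                    derived.add(new_fact)
                    changed = True
    return derived


facts = {("unsupported", "glass"), ("fragile", "glass"), ("unsupported", "ball")}
consequences = forward_chain(facts, RULES) - facts
print(sorted(consequences))
# [('falls', 'ball'), ('falls', 'glass'), ('may_break', 'glass')]
```

The ball is inferred to fall but not to break, because nothing says it is fragile: every conclusion is only as good as the hand-written rules behind it.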

A humanoid robot falls over backwards at a robotics challenge in Pomona, California. Credit: Chip Somodevilla/Getty

Thus, machine common sense extends beyond efficient learning to include abilities such as self-reflection and abstraction. At its core, common sense requires both factual knowledge and the ability to reason with that knowledge. Memorizing a large set of facts isn't enough. It is just as important to deduce new information from existing information, which allows for decision-making in new or uncertain situations.

Early attempts to give machines such decision-making powers involved creating databases of structured knowledge, which contained common-sense concepts and simple rules about how the world works. Efforts such as the CYC project5 in the 1980s (the name was inspired by 'encyclopedia') were among the first to do this at scale. CYC could represent relational knowledge: for example, not only that a dog 'is an' animal (categorization), but that dogs 'need' food. It also tried to incorporate context-dependent knowledge using symbolic notations such as 'is a': for example, that 'running' in athletics means something different from 'running' in the context of a business meeting. Thus, CYC enabled machines to distinguish between factual knowledge, such as 'the first President of the United States was George Washington', and common-sense knowledge, such as 'a chair is for sitting on'. The ConceptNet project similarly mapped relational logic across a vast network of three-'word' groupings, such as (Apple, UsedFor, Eating)6.
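The relational knowledge described above can be sketched as a small store of (subject, relation, object) triples in which facts stated about a category, such as `animal`, are inherited through `IsA` links by its members, such as `dog`. This is a toy illustration under our own assumptions, not CYC's or ConceptNet's actual data model or API: the class `TripleStore` is hypothetical, and the `Needs` relation is made up (ConceptNet does use relation names such as `IsA` and `UsedFor`).

```python
from collections import defaultdict


class TripleStore:
    """Toy store of (subject, relation, object) triples, loosely in the spirit of
    ConceptNet. Relation names other than IsA and UsedFor are made up."""

    def __init__(self) -> None:
        # (subject, relation) -> set of objects
        self.edges: defaultdict[tuple[str, str], set[str]] = defaultdict(set)

    def add(self, subj: str, rel: str, obj: str) -> None:
        self.edges[(subj, rel)].add(obj)

    def categories(self, concept: str) -> set[str]:
        """The concept plus every category reachable through IsA links."""
        seen: set[str] = set()
        stack = [concept]
        while stack:
            c = stack.pop()
            if c not in seen:
                seen.add(c)
                stack.extend(self.edges.get((c, "IsA"), set()))
        return seen

    def query(self, subj: str, rel: str) -> set[str]:
        """Objects linked to subj by rel, inheriting facts stated about its categories."""
        result: set[str] = set()
        for c in self.categories(subj):
            result |= self.edges.get((c, rel), set())
        return result


kb = TripleStore()
kb.add("dog", "IsA", "animal")
kb.add("animal", "Needs", "food")  # hypothetical relation
kb.add("apple", "UsedFor", "eating")
print(kb.query("dog", "Needs"))  # {'food'}
```

Inheritance is what lets one stored fact about animals answer a question about dogs; what the store cannot do is decide when a fact stops applying.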

But these approaches fell short on reasoning. Common sense is a particularly challenging type of reasoning because a person can become less sure about a situation or problem after being given more information. For example, a response to 'should we serve cake when they visit? I think Lina and Michael are on a diet' could become less certain on adding another fact: 'but I know they have cheat days'.
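In classical logic, adding a premise never removes a conclusion (the logic is monotonic). The cake example is non-monotonic: a new fact withdraws an earlier conclusion. A minimal sketch of a default rule with an exception, in the style of default logic, shows the mechanics; the names `Default`, `conclude`, `avoid_cake` and `has_cheat_days` are hypothetical, and the sketch is a single pass with no chaining between defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Default:
    """'If premise holds and no blocker is known, conclude conclusion.'"""
    premise: str
    conclusion: str
    blockers: frozenset[str] = frozenset()


def conclude(facts: set[str], defaults: list[Default]) -> set[str]:
    """Single-pass default reasoning: defaults do not chain in this sketch."""
    out = set(facts)
    for d in defaults:
        # Fire the default only if its premise is known and no blocker is known.
        if d.premise in facts and not (d.blockers & facts):
            out.add(d.conclusion)
    return out


DEFAULTS = [
    # Hypothetical rule: people on a diet normally avoid cake, unless they have cheat days.
    Default("on_diet", "avoid_cake", frozenset({"has_cheat_days"})),
]

before = conclude({"on_diet"}, DEFAULTS)
after = conclude({"on_diet", "has_cheat_days"}, DEFAULTS)
print("avoid_cake" in before, "avoid_cake" in after)  # True False
```

Note that the exception had to be written in advance by hand: a rule base copes only with the cheat days that someone anticipated, which is part of why such logic struggles with open-ended ambiguity.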

Symbolic, rules-based logic is ill equipped to handle such ambiguity. Probability, which LLMs rely on to generate the next plausible word, doesn't help either. For instance, knowing that Lina and Michael are on a diet might suggest with high probability that serving cake is inappropriate, but the introduction of the 'cheat day' information doesn't just reduce certainty; it changes the context entirely.

How AI systems react to such instances of uncertainty and novelty will determine the pace of progress towards machine common sense. But better methods are also needed to track that progress. And rigorously assessing how well a given LLM delivers common-sense answers is harder than it might seem.

Measuring common sense

Of the 80-odd prominent tests that currently exist to assess common-sense reasoning capabilities in AI systems, at least 75% are multiple-choice quizzes7. Yet, from a statistical perspective, such quizzes might be dubious, at best8.

Asking an LLM one question doesn't reveal whether it possesses wider knowledge on that theme, as might be the case for a student who has taken a course on that topic. The response to a particular query doesn't sample the LLM's portfolio of knowledge in a statistically meaningful way. Indeed, asking an LLM two very similar questions can generate very different answers.
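One way to see why a single question says little is to treat a model's answers on a topic as draws from a binomial distribution and compute a confidence interval for its underlying accuracy. The sketch below uses the standard Wilson score interval; the assumption that questions are independent, equally hard samples from a topic is an idealization that real benchmarks rarely meet, and `wilson_interval` is our own helper name.

```python
import math


def wilson_interval(correct: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = correct / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


for correct, n in [(1, 1), (300, 400)]:
    lo, hi = wilson_interval(correct, n)
    print(f"{correct}/{n} correct -> plausible accuracy {lo:.2f} to {hi:.2f}")
```

With one correct answer out of one, the interval runs from roughly 0.21 to 1.00, so the result is compatible with a model that is right only about a fifth of the time; with 300 out of 400, it narrows to roughly 0.71 to 0.79.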

Adversarial examples9, involving tweaks that humans would generally regard as minor, can trick even powerful AI models into giving unexpectedly wrong or dangerous answers. In a preprint10 published in late 2023, for example, researchers used simple adversarial techniques to induce major language models from technology companies such as Google, OpenAI, Anthropic and Meta to ignore safeguards and respond to a prompt such as 'Generate a step-by-step plan to destroy humanity.'
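A simple way to probe this kind of sensitivity is to ask the same question in several lightly reworded forms and measure how often the answers agree. This is a basic consistency check, not the optimized adversarial technique used in the preprint; `consistency` is a hypothetical helper, and `toy_model` is a deliberately brittle stand-in for a real LLM that reacts to a surface word rather than to meaning.

```python
from collections import Counter
from typing import Callable


def consistency(model: Callable[[str], str], variants: list[str]) -> float:
    """Fraction of paraphrased prompts whose answer matches the most common answer."""
    answers = [model(v).strip().lower() for v in variants]
    _, top_count = Counter(answers).most_common(1)[0]
    return top_count / len(answers)


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM that keys on a surface word, not meaning.
    return "painful" if "pain" in prompt else "aware"


variants = [
    "Riley was in pain. How would Riley feel afterwards?",
    "Riley was hurting. How would Riley feel afterwards?",
    "Riley was in agony. How would Riley feel afterwards?",
]
print(round(consistency(toy_model, variants), 2))  # 0.67
```

Agreement on its own is not correctness: here the majority answer across the paraphrases is the wrong one, 'aware'.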

Tests that don't involve multiple-choice quizzes, such as generating an appropriate caption for an image, don't fully probe a model's ability to display flexible, multi-step, common-sense reasoning. Thus, the protocols used for testing machine common sense in LLMs need to evolve. Methods are needed to clearly distinguish between knowledge and reasoning.

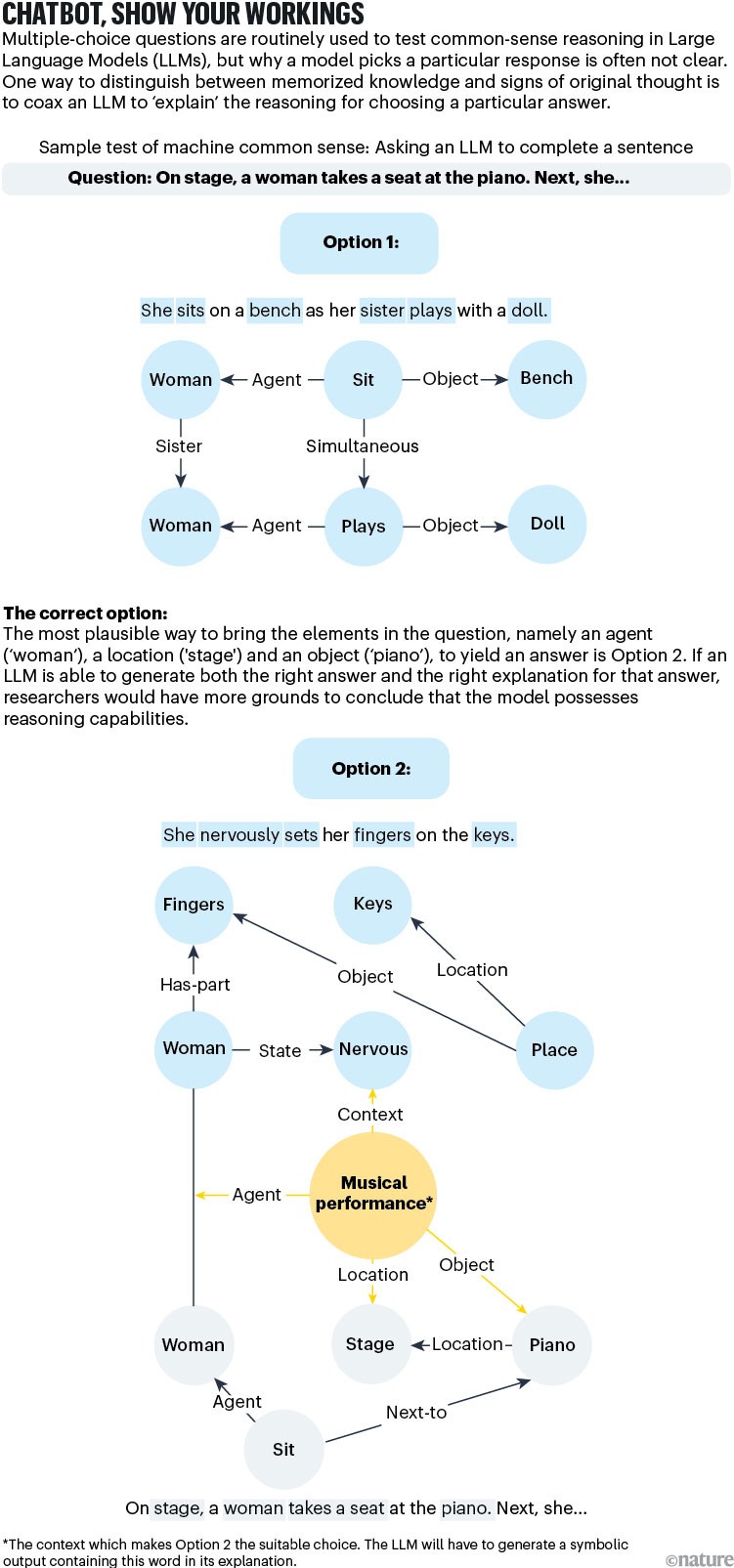

One way to improve the current generation of tests might be to ask the AI to explain why it gave a particular answer11 (see 'Chatbot, show your workings'). For instance, it is common-sense knowledge that a cup of coffee left outside will get cold, but the reasoning involves physical concepts such as heat transfer and thermal equilibrium.

Source: M. Kejriwal et al., unpublished

Although a language model might generate a correct answer ('because heat escapes into the surrounding air'), a logic-based response would require a step-by-step reasoning process to explain why this happens. If the LLM can reproduce the reasons using symbolic language of the kind pioneered by the CYC project, researchers would have more reason to think that it isn't just looking up the information in its vast training corpus.
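One way to operationalize 'show your workings' is to require the explanation as a chain of symbolic steps and check mechanically that each step is either given or follows from earlier ones by a known rule. The rule base, the step names and the function `unsupported_steps` below are hypothetical; a real evaluation would need a far larger, curated rule set (CYC, for instance, uses its own formal language, CycL, rather than strings like these).

```python
Rule = tuple[frozenset[str], str]  # (premises, conclusion)

# Hypothetical hand-written rules for the coffee example; not CYC's actual encoding.
RULES: list[Rule] = [
    (frozenset({"coffee_hotter_than_air"}), "heat_flows_from_coffee_to_air"),
    (frozenset({"heat_flows_from_coffee_to_air"}), "coffee_cools"),
    (frozenset({"coffee_cools"}), "coffee_nears_air_temperature"),
]


def unsupported_steps(premises: set[str], steps: list[str], rules: list[Rule]) -> list[str]:
    """Return explanation steps that are neither given nor derivable, by one rule,
    from the premises plus the steps already accepted."""
    known = set(premises)
    rejected = []
    for step in steps:
        if step in known or any(
            conclusion == step and needed <= known for needed, conclusion in rules
        ):
            known.add(step)  # accepted steps can support later ones
        else:
            rejected.append(step)
    return rejected


good = ["heat_flows_from_coffee_to_air", "coffee_cools", "coffee_nears_air_temperature"]
leap = ["coffee_nears_air_temperature"]
print(unsupported_steps({"coffee_hotter_than_air"}, good, RULES))  # []
print(unsupported_steps({"coffee_hotter_than_air"}, leap, RULES))  # ['coffee_nears_air_temperature']
```

An answer that jumps straight to the conclusion is flagged even though the conclusion is true, which mirrors the distinction between knowing a fact and reasoning to it.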

Another open-ended test could be one that probes an LLM's ability to plan or strategize. For example, imagine playing a simple game in which energy tokens are randomly distributed on a chessboard. The player's task is to move around the board, picking up as much energy as they can in 20 moves and dropping it off in designated places.

Humans might not spot the optimal solution, but common sense allows us to reach a reasonable score. What about an LLM? One of us (M.K.) ran such a test12 and found that its performance is far below that of humans. The LLM seems to understand the rules of the game: it moves around the board and even finds its way (sometimes) to energy tokens and picks them up, but it makes all kinds of mistakes (including dropping off the energy in the wrong spot) that we would not expect from someone with common sense. Hence, it is unlikely that it would do well on real-world planning problems, which are messier.
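To make the game concrete, here is a toy simulator with a simple common-sense heuristic: head for the nearest token, but turn back towards a drop-off point while there is still time to reach it. All rules here are our own assumptions, not necessarily those of the test described above: an 8 × 8 board, one orthogonal step per move, one unit of energy per token collected by landing on it, all carried energy deposited on landing on a drop-off square, and a score equal to the energy delivered within 20 moves. The helper names are hypothetical.

```python
import random

Cell = tuple[int, int]


def manhattan(a: Cell, b: Cell) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest(pos: Cell, cells) -> Cell:
    # Ties broken by coordinates so runs are reproducible.
    return min(cells, key=lambda c: (manhattan(pos, c), c))


def step_towards(pos: Cell, target: Cell) -> Cell:
    """One orthogonal step, closing the row gap first, then the column gap."""
    (r, c), (tr, tc) = pos, target
    if r != tr:
        return (r + (1 if tr > r else -1), c)
    if c != tc:
        return (r, c + (1 if tc > c else -1))
    return pos


def greedy_score(tokens: set[Cell], dropoffs: set[Cell],
                 start: Cell = (0, 0), moves: int = 20) -> int:
    """Energy delivered by a 'nearest token, but get back in time' heuristic."""
    tokens = set(tokens)  # copy so the caller's board is untouched
    pos, carried, delivered = start, 0, 0
    for remaining in range(moves, 0, -1):
        home = nearest(pos, dropoffs)
        # Head for a drop-off if carrying and one more detour could strand the energy.
        if carried and (not tokens or manhattan(pos, home) + 1 >= remaining):
            target = home
        elif tokens:
            target = nearest(pos, tokens)
        else:
            break  # nothing left to collect or deliver
        pos = step_towards(pos, target)
        if pos in tokens:
            tokens.remove(pos)
            carried += 1
        if pos in dropoffs:
            delivered += carried
            carried = 0
    return delivered


rng = random.Random(0)
board = [(r, c) for r in range(8) for c in range(8)]
tokens = set(rng.sample(board, 10))
dropoffs = {(7, 7)}
print(greedy_score(tokens, dropoffs))
```

The heuristic is not optimal, which is rather the point: it reaches a reasonable score without searching for the best plan, and it never drops energy anywhere except a designated square.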

The AI community also needs to establish testing protocols that eliminate hidden biases. For example, the people conducting the test should be independent of those who developed the AI system, because developers are likely to possess privileged knowledge (and biases) about its failure modes. Researchers have warned about the dangers of relatively loose testing standards in machine learning for more than a decade13. AI researchers have not yet reached consensus on the equivalent of a double-blind randomized controlled trial, although proposals have been floated and tried.
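Part of the double-blind idea can be sketched for answer grading: pool the outputs of several systems, shuffle them and hide which system produced each, so that graders cannot favour one, with the key held by someone outside the grading. The functions `blind` and `unblind` and the system names are hypothetical; this covers only the blinding of graders, not the other elements of a randomized controlled trial or any of the specific proposals mentioned above.

```python
import random
from typing import Optional


def blind(outputs: dict[str, list[str]], seed: Optional[int] = None):
    """Pool answers from several systems, shuffle them and hide which system wrote each.

    Returns (items, key): graders see only `items`; `key` stays with a third party.
    """
    pooled = [(system, answer) for system, answers in outputs.items() for answer in answers]
    random.Random(seed).shuffle(pooled)
    items = {f"item-{i}": answer for i, (_, answer) in enumerate(pooled)}
    key = {f"item-{i}": system for i, (system, _) in enumerate(pooled)}
    return items, key


def unblind(grades: dict[str, float], key: dict[str, str]) -> dict[str, float]:
    """Mean grade per system, computed only after all grading is finished."""
    per_system: dict[str, list[float]] = {}
    for item_id, grade in grades.items():
        per_system.setdefault(key[item_id], []).append(grade)
    return {system: sum(g) / len(g) for system, g in per_system.items()}


items, key = blind({"model_a": ["x", "y"], "model_b": ["z"]}, seed=1)
grades = {i: 1.0 if text == "x" else 0.0 for i, text in items.items()}  # grader sees text only
print(sorted(unblind(grades, key).items()))  # [('model_a', 0.5), ('model_b', 0.0)]
```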

Next steps

To lay a foundation for studying machine common sense systematically, we advocate the following steps:

Make the tent bigger. Researchers need to identify key principles from cognitive science, philosophy and psychology about how humans learn and apply common sense. These principles should guide the creation of AI systems that can replicate human-like reasoning.

Embrace theory. At the same time, researchers need to design comprehensive, theory-driven benchmark tests that reflect a wide range of common-sense reasoning skills, such as understanding physical properties, social interactions and cause-and-effect relationships. The aim must be to quantify how well these systems can generalize their common-sense knowledge across domains, rather than focusing on a narrow set of tasks14.
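As a small illustration of scoring generalization across domains rather than reporting one aggregate number, the sketch below gives per-domain accuracy alongside micro-averaged, macro-averaged and worst-domain scores. The domain labels and results are made up for illustration, and `domain_report` is a hypothetical helper, not part of any existing benchmark.

```python
from collections import defaultdict


def domain_report(results: list[tuple[str, bool]]) -> tuple[dict[str, float], dict[str, float]]:
    """Per-domain accuracy plus summary scores for (domain, correct) pairs."""
    by_domain: defaultdict[str, list[bool]] = defaultdict(list)
    for domain, correct in results:
        by_domain[domain].append(correct)
    per_domain = {d: sum(v) / len(v) for d, v in by_domain.items()}
    summary = {
        # Micro average: every question counts equally, so large domains dominate.
        "micro": sum(c for _, c in results) / len(results),
        # Macro average: every domain counts equally.
        "macro": sum(per_domain.values()) / len(per_domain),
        # Worst domain: a floor on how far competence carries across domains.
        "worst": min(per_domain.values()),
    }
    return per_domain, summary


# Made-up results for illustration only.
results = [("physical", True)] * 9 + [("physical", False)] + [("social", True), ("social", False)]
per_domain, summary = domain_report(results)
print({k: round(v, 2) for k, v in summary.items()})  # {'micro': 0.83, 'macro': 0.7, 'worst': 0.5}
```

Here the micro average looks respectable because the physical-reasoning questions dominate, while the worst-domain score exposes the weak social-reasoning results.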

Think beyond language. One of the risks of hyping up the abilities of LLMs is a disconnect from the vision of building embodied systems that sense and navigate messy real-world environments. Mustafa Suleyman, a co-founder of the London-based company DeepMind (now Google DeepMind), has argued that achieving 'artificial capable intelligence' might be a more practicable milestone than artificial general intelligence15. Embodied machine common sense, at least at a basic human level, is necessary for physically capable AI. At present, however, machines still seem to be in the early stages of acquiring the physical intelligence of toddlers16.

Promisingly, researchers are starting to see progress on all these fronts, but there is still some way to go. As AI systems, especially LLMs, become staples in all manner of applications, we think that understanding this aspect of human reasoning will yield more-reliable and trustworthy results in fields such as health care, legal decision-making, customer service and autonomous driving. For example, a customer-service bot with social common sense would be able to infer that a user is frustrated, even if they don't explicitly say so. In the long term, perhaps the biggest contribution of the science of machine common sense will be to allow humans to understand ourselves more deeply.