Tasks such as literature review can be made simpler with AI tools, but they should be used with caution. Credit: Olena Hromova/Alamy

Artificial-intelligence (AI) tools are transforming the way we work. Many products attempt to make scientific research more efficient by helping researchers to sort through large volumes of literature.

These scientific search engines are based on large language models (LLMs) and are designed to sift through existing research papers and summarize the key findings. AI companies are constantly updating their models’ features, and new tools are regularly being launched.

Nature spoke to developers of these tools and researchers who use them to gather tips on how to apply them, and pitfalls to watch out for.

What tools are available?

Some of the most popular LLM-based tools include Elicit, Consensus and You, which offer various ways to speed up literature review.

When users enter a research question into Elicit, it returns lists of relevant papers and summaries of their key findings. Users can ask further questions about specific papers, or filter by journal or study type.

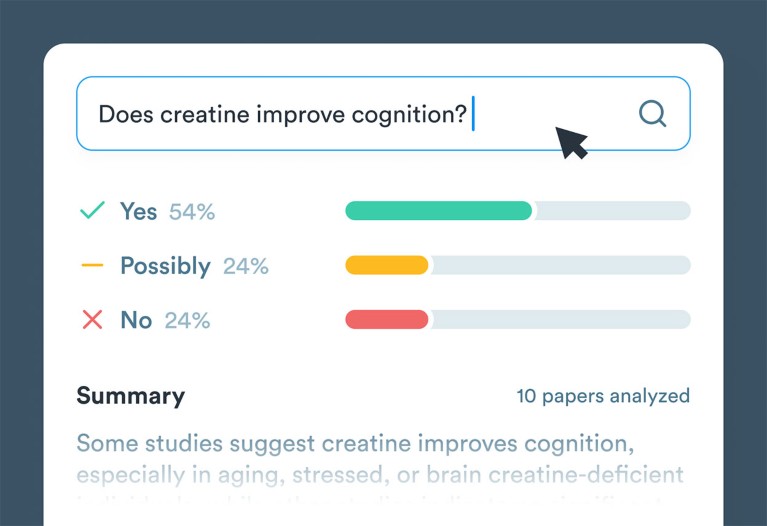

Consensus helps researchers to understand the variety of scientific information on a topic. Users can enter questions such as ‘Can ketamine treat depression?’, and the tool provides a ‘consensus meter’ showing where scientific agreement lies. Researchers can read summaries of papers that agree, disagree or are unsure about the hypothesis. Eric Olson, the chief executive of Consensus in Boston, Massachusetts, says that the AI tool doesn’t replace in-depth interrogation of papers, but it is useful for high-level scanning of studies.

You, a software development company in Palo Alto, California, says that it was the first search engine to incorporate AI search with up-to-date citation data for studies. The tool offers users different ways to explore research questions; for instance, its ‘genius mode’ presents answers in charts. Last month, You launched a ‘multiplayer tool’ that allows colleagues to collaborate and share custom AI chats that can automate specific tasks, such as fact checking.

Consensus can give some idea of where scientific agreement lies on a particular topic or question. Credit: Consensus

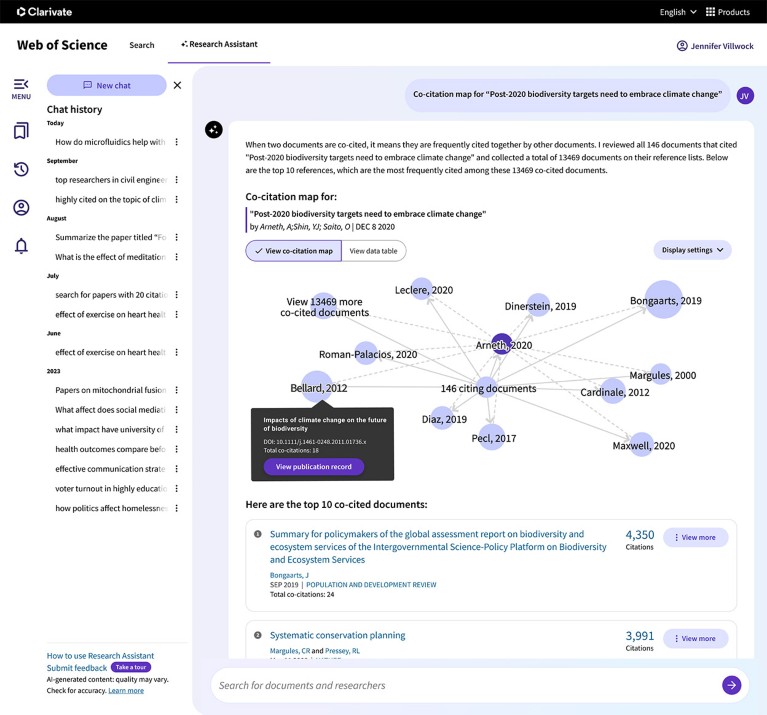

Clarivate, a research analytics company headquartered in London, launched its AI-powered research assistant in September, which allows users to quickly search through the Web of Science database. Scientists can enter a research question and view relevant abstract summaries, related topics and citation maps, which show the papers that each study cites and can help researchers to identify key literature, Clarivate says.

And although papers in the Web of Science are in English, Clarivate’s AI tool can also summarize paper abstracts in different languages. “Language translation baked into large language models has huge potential to even out scientific literature around the world,” says Francesca Buckland, vice-president of product at Clarivate, who is based in London.

BioloGPT is one of a growing number of subject-specific AI tools and produces summary and in-depth answers to biological questions.

Which tools suit which tasks?

“I always say that it depends on what you really want to do,” says Razia Aliani, an epidemiologist in Calgary, Canada, when asked about the best AI search engine tools to use.

When she needs to understand the consensus or diversity of opinion on a topic, Aliani gravitates towards Consensus.

Aliani, who also works at the systematic-review company Covidence, uses other AI tools when reviewing large databases. For example, she has used Elicit to fine-tune her research interests. After inputting an initial research question, Aliani uses Elicit to exclude irrelevant papers and delve deeper into more-relevant ones.

Aliani says that AI search tools don’t just save time, they can help with “enhancing the quality of work, sparking creativity and even finding ways to make tasks less stressful”.

Clarivate’s AI tool produces citation maps showing the papers cited by each study. Credit: Web of Science, Clarivate

Anna Mills teaches introductory writing classes at the College of Marin in San Francisco, California, including lessons on the research process. She says that it’s tempting to introduce her students to these tools, but she’s concerned that they could hinder the students’ understanding of scholarly research. Instead, she’s keen to teach students how AI search tools make mistakes, so they can develop the skills to “critically assess what these AI systems are giving them”.

“Part of being a good scientist is being sceptical about everything, including your own methods,” says Conner Lambden, BioloGPT’s founder, who is based in Golden, Colorado.

What about inaccurate answers and misinformation?

Concerns abound about the accuracy of the outputs of major AI chatbots, such as ChatGPT, which can ‘hallucinate’ false information and invent references.

That’s led to some scepticism about science search engines, and researchers should be cautious, say users. Common errors that AI research tools make include making up statistics, misrepresenting cited papers and biases based on these tools’ training systems.

The issues that sports scientist Alec Thomas has experienced when using AI tools have led him to abandon their use. Thomas, who is at the University of Lausanne in Switzerland, previously appreciated AI search tools, but stopped using them after finding “some very serious, basic errors”. For instance, when researching how people with eating disorders are affected if they take up a sport, an AI tool summarized a paper that it said was relevant, but in reality “it had nothing to do with the original query”, he says. “We wouldn’t trust a human that is known to hallucinate, so why would we trust an AI?” he says.

How are developers addressing inaccurate answers?

The developers that Nature spoke to say they’ve implemented safeguards to improve accuracy. James Brady, head of engineering at Elicit in Oakland, California, says that the firm takes accuracy seriously, and it is using several safety systems to check for errors in answers.

Buckland says that the Web of Science AI tool has “robust safeguards” to prevent the inclusion of fraudulent and problematic content. During beta-testing, the team worked with around 12,000 researchers to incorporate feedback, she says.

Although such feedback improves user experience, Olson says that this, too, could influence hallucinations. AI search tools are “trained on human feedback, they want to provide a good answer to humans”, says Olson. So “they’ll fill in gaps of things that aren’t there”.

Andrew Hoblitzell, a generative AI researcher in Indianapolis, Indiana, who lectures at universities through a programme called AI4All, thinks that AI search tools can support the research process, providing that scientists verify the information generated. “Right now, these tools should be used in a hybrid manner rather than a definitive source.”