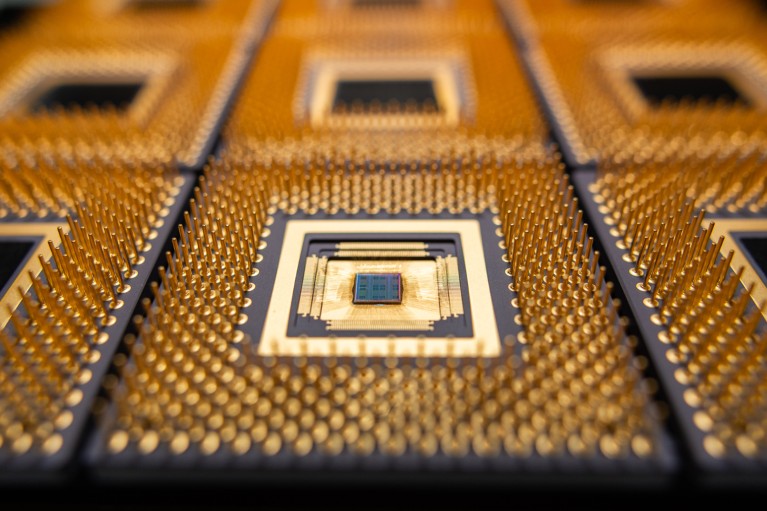

An advanced ‘in-memory’ computing chip developed by researchers at Princeton University, New Jersey, and US firm EnCharge AI.

Credit: Scott Lyon

In the late 1990s, some computer scientists realized that they were hurtling towards disaster. Makers of computer chips had been able to make computers more powerful on a reliable schedule by cramming ever more and ever smaller electronic switches, called transistors, onto the chips’ processing cores and running them at ever greater speeds. But if speeds had kept increasing, the energy consumption of central processing units (CPUs) would have become unsustainable.

So manufacturers changed tack: rather than trying to toggle the transistors on and off faster, they added multiple processing cores to their chips. Dividing a task across two or more cores running at slower speeds brought more energy-efficient performance gains. The technology giant IBM released the first mainstream multicore computer processor in 2001; other major chipmakers of the day, such as Intel and AMD, soon followed. Multicore chips drove continued progress in computing, making today’s laptops and smartphones possible.

Nature Outlook: Robotics and artificial intelligence

Now, some computer scientists say that the field is facing another reckoning, owing to the increasing adoption of energy-hungry artificial intelligence (AI). Generative AI can create images and videos, summarize notes and write papers. But these capabilities are the result of machine-learning models that consume tremendous amounts of energy.

The energy required to train and operate these models could spell trouble both for the environment and for progress in machine learning. “To lower the power consumption is crucial; otherwise we’ll see development stop,” says Hechen Wang, a research scientist at Intel Labs in Hillsboro, Oregon. Roy Schwartz, a computer scientist at the Hebrew University of Jerusalem, Israel, says he doesn’t want AI to become “a rich man’s tool”. The staff, infrastructure and particularly the power required to train generative AI models could limit who can create and use them, and only a handful of entities have the resources, he says.

At this point of potential crisis, many hardware designers see an opportunity to remake the basic blueprint of computer chips to make them more energy efficient. Doing so will not only help AI to work more efficiently in data centres, but also allow more AI tasks to be carried out directly on personal devices, for which battery life is often crucial. However, to convince industry to embrace such large architectural changes, researchers will need to show significant benefits.

Power up

According to the International Energy Agency (IEA), in 2022, data centres consumed 1.65 billion gigajoules of electricity, about 2% of global demand. Widespread deployment of AI will only increase electricity use. By 2026, the agency projects, data centres’ energy consumption will have increased by between 35% and 128%: amounts equivalent to adding the annual energy consumption of Sweden at the lower estimate, or of Germany at the top end.
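The IEA figure is easier to set against other electricity statistics when it is expressed in terawatt-hours. The sketch below does only that unit conversion and applies the agency’s 35% and 128% growth bounds quoted above; it introduces no data beyond those numbers, and the function names are illustrative.

```python
# Back-of-envelope conversion of the IEA data-centre figures quoted above.
# 1 TWh = 1e12 Wh = 1e12 * 3600 J = 3.6e15 J = 3.6e6 GJ.
GJ_PER_TWH = 3.6e6


def gj_to_twh(gigajoules: float) -> float:
    """Convert an energy quantity in gigajoules to terawatt-hours."""
    return gigajoules / GJ_PER_TWH


def projected_range(base: float, low_pct: float, high_pct: float) -> tuple[float, float]:
    """Apply a low and a high percentage increase to a baseline value."""
    return base * (1 + low_pct / 100), base * (1 + high_pct / 100)


consumption_2022_twh = gj_to_twh(1.65e9)  # roughly 458 TWh
low_2026, high_2026 = projected_range(consumption_2022_twh, 35, 128)

print(f"2022: {consumption_2022_twh:.0f} TWh")
print(f"2026: {low_2026:.0f} to {high_2026:.0f} TWh")
print(f"added demand: {low_2026 - consumption_2022_twh:.0f} to "
      f"{high_2026 - consumption_2022_twh:.0f} TWh per year")
```

The added demand, roughly 160 to 590 TWh a year, is the quantity the Sweden and Germany comparisons refer to.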

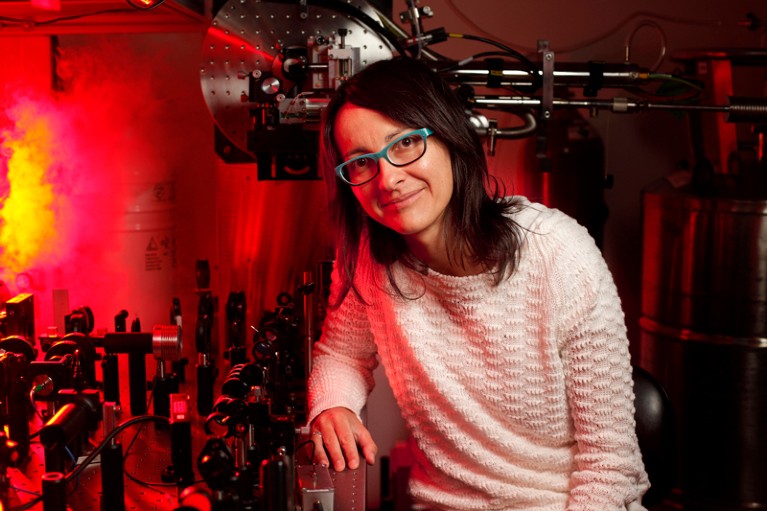

Jelena Vuckovic developed a ‘waveguide’ that could improve the energy efficiency of chips.

Credit: Craig Lee

One potential driver of this increase is the shift to AI-powered web searches. The precise consumption of existing AI algorithms is hard to pin down, but according to the IEA, a typical request to the chatbot ChatGPT consumes 10 kilojoules, roughly ten times as much as a conventional Google search.
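Per-query figures look tiny until they are multiplied by query volume. The sketch below uses the 10-kilojoule figure quoted above, takes a conventional search as one-tenth of that, and assumes a purely hypothetical volume of one billion queries a day (not a reported figure) to show how the gap scales to annual totals.

```python
# Rough scaling of per-query energy to annual totals.
KJ_PER_CHATBOT_QUERY = 10.0                       # IEA figure, as quoted
KJ_PER_SEARCH_QUERY = KJ_PER_CHATBOT_QUERY / 10   # "roughly ten times" less
QUERIES_PER_DAY = 1e9                             # hypothetical volume, for illustration only

J_PER_TWH = 3.6e15


def annual_twh(kj_per_query: float, queries_per_day: float) -> float:
    """Annual energy in TWh for a constant daily query volume."""
    joules_per_year = kj_per_query * 1e3 * queries_per_day * 365
    return joules_per_year / J_PER_TWH


chatbot = annual_twh(KJ_PER_CHATBOT_QUERY, QUERIES_PER_DAY)
search = annual_twh(KJ_PER_SEARCH_QUERY, QUERIES_PER_DAY)
print(f"chatbot-style: {chatbot:.2f} TWh/yr, search-style: {search:.2f} TWh/yr")
print(f"extra energy from switching: {chatbot - search:.2f} TWh/yr")
```

Because the model is linear, doubling the assumed volume doubles every output; the ten-to-one ratio is the only part taken from the text.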

Companies seem to have calculated that these energy costs are a worthwhile investment. Google’s 2024 environmental report revealed that its carbon emissions have increased by 48% in five years. In May, Brad Smith, president of Microsoft in Redmond, Washington, said that the company’s emissions had risen by 30% since 2020. Although nobody wants a huge energy bill, companies that make AI models are focused on achieving the best results. “Usually people don’t care about energy efficiency when you’re training the world’s largest model,” says Naresh Shanbhag, a computer engineer at the University of Illinois Urbana–Champaign.

The high energy consumption associated with training and running AI models is due largely to the reliance of these models on huge databases, and to the cost of moving these data between computing and memory, within and between chips. When training a large AI model, up to 90% of the energy is spent accessing memory, says Subhasish Mitra, a computer scientist at Stanford University in California. A machine-learning model capable of identifying fruits in photographs is trained by showing the model as many example images as possible, and this involves moving a huge amount of data in and out of memory, repeatedly. Similarly, natural language processing models are not made by programming the rules of English grammar; instead, some of these models have been trained by exposing them to much of the English-language material on the Internet. “As mind-blowing as it might seem, we’re close to exhausting all text ever written by humans,” Schwartz says. Again, this means that training requires large amounts of data to be moved in and out of thousands of graphics processing units (GPUs).

The basic design of today’s computing systems, with separate processing and memory units, isn’t well suited to this mass movement of data. “The biggest problem is the memory wall,” says Mitra.
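A toy energy model makes the memory wall concrete. When each weight of a neural-network layer is fetched from memory for a single multiply-accumulate (MAC), the fetch can cost far more than the arithmetic, and keeping the data close to the arithmetic, which is the idea behind computing in memory, shifts the balance. The per-operation energies below are hypothetical placeholders, not measurements; real values depend heavily on process node, memory type and circuit design.

```python
from dataclasses import dataclass

# Illustrative per-operation energies in picojoules (assumptions, not data).
PJ_PER_MAC = 4.6           # one 32-bit multiply-accumulate (hypothetical)
PJ_PER_WORD_DRAM = 640.0   # fetch one 32-bit word from off-chip DRAM (hypothetical)
PJ_PER_WORD_SRAM = 5.0     # fetch one 32-bit word from small on-chip SRAM (hypothetical)


@dataclass
class EnergyBreakdown:
    compute_pj: float
    memory_pj: float

    @property
    def total_pj(self) -> float:
        return self.compute_pj + self.memory_pj

    @property
    def memory_fraction(self) -> float:
        return self.memory_pj / self.total_pj


def matvec_energy(rows: int, cols: int, pj_per_word: float) -> EnergyBreakdown:
    """Energy of y = W @ x when every weight of W is fetched once from memory.

    Each of the rows * cols weights needs one fetch and one MAC; the much smaller
    traffic for x and y is ignored to keep the model simple.
    """
    n = rows * cols
    return EnergyBreakdown(compute_pj=n * PJ_PER_MAC, memory_pj=n * pj_per_word)


far = matvec_energy(4096, 4096, PJ_PER_WORD_DRAM)
near = matvec_energy(4096, 4096, PJ_PER_WORD_SRAM)
print(f"weights in DRAM: {far.memory_fraction:.1%} of energy spent on memory")
print(f"weights in SRAM: {near.memory_fraction:.1%} of energy spent on memory")
print(f"total energy, far vs near: {far.total_pj / near.total_pj:.1f}x")
```

With these made-up numbers, almost all of the energy goes to data movement when the weights live off-chip, which is the pattern Mitra describes, even though his 90% figure comes from real systems rather than from a model like this.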

Tearing down the wall

The GPU is a popular choice for developing AI models. William Dally, chief scientist at Nvidia in Santa Clara, California, says that the company has improved the performance per watt of its GPUs 4,000-fold over the past ten years. The company continues to develop specialized circuits in these chips, called accelerators, that are designed for the types of calculation used in AI, but he doesn’t expect drastic architectural changes. “I think GPUs are here to stay,” he says.

Introducing new materials, new processes and wildly different designs into a semiconductor industry that is projected to reach US$1 trillion in value by 2030 is a lengthy and complicated process. For firms such as Nvidia to take the risks, researchers will need to show major benefits. But to some researchers, the need for change is already clear.

They say that GPUs won’t be able to offer enough efficiency improvements to address AI’s growing energy consumption, and that they plan to have high-performance technologies ready in the years ahead. “There are many start-ups and semiconductor companies exploring alternative options,” says Shanbhag. These new architectures are likely to make their way first into smartphones, laptops and wearable devices. That is where the benefits of new technology, such as being able to fine-tune AI models on the device using localized, personal data, are clearest, and where AI’s energy needs are most limiting.

Computing might seem abstract, but there are physical forces at work. Any time electrons move through chips, some energy is dissipated as heat. Shanbhag is one of the early developers of a type of architecture that seeks to minimize this waste. Called computing in memory, these approaches include techniques such as placing an island of memory within a computing core. This saves energy simply by shortening the distance that data must travel. Researchers are also trying different approaches to computing, such as performing some operations in the memory itself.

To work within the energy-constrained environment of a portable device, some computer scientists are exploring what might sound like a huge step backwards: analogue computing. The digital devices that have been synonymous with computing since the mid-twentieth century operate in a crisp world of on or off, represented as 1s and 0s. Analogue devices work with the in-between, and can store more data in a given area because they have access to a range of states, so more computing bang is available from a given chip area.

States in an analogue device might be different forms of a crystal in a phase-change memory cell, or a continuum of charge levels in a resistive wire. Because the differences between analogue states can be smaller than the difference between the widely separated 1 and 0, it takes less energy to switch between them. “Analogue has higher energy efficiency,” says Intel’s Wang. The downside is that it is noisy, lacking the clarity of signal that makes digital computation robust. Wang says that AI models called neural networks are inherently tolerant of a certain level of error, and he is exploring how to balance this trade-off. Some teams are working on digital in-memory computing, which avoids this issue but might not offer the energy benefits of analogue approaches.
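The trade-off Wang describes can be sketched numerically. Below, a hypothetical analogue array stores each weight as the nearest of a fixed number of levels (16 levels hold 4 bits in one cell, which is the density advantage of analogue storage) and reads it back with Gaussian noise; the dot product it computes is then compared with the exact one. The level counts and noise values are arbitrary assumptions, and this is a generic simulation rather than a model of any particular chip.

```python
import random


def quantize(w: float, levels: int, w_max: float = 1.0) -> float:
    """Map w in [-w_max, w_max] onto the nearest of `levels` evenly spaced states."""
    step = 2 * w_max / (levels - 1)
    idx = round((w + w_max) / step)
    idx = min(max(idx, 0), levels - 1)  # clamp to the available states
    return -w_max + idx * step


def analogue_dot(weights, inputs, levels: int, noise_sigma: float, rng: random.Random) -> float:
    """Dot product as a hypothetical noisy analogue in-memory array might compute it."""
    total = 0.0
    for w, x in zip(weights, inputs):
        stored = quantize(w, levels) + rng.gauss(0.0, noise_sigma)  # read-out noise
        total += stored * x
    return total


rng = random.Random(0)
n = 256
weights = [rng.uniform(-1, 1) for _ in range(n)]
inputs = [rng.uniform(0, 1) for _ in range(n)]
exact = sum(w * x for w, x in zip(weights, inputs))

for levels, sigma in [(256, 0.0), (16, 0.0), (16, 0.02), (4, 0.05)]:
    approx = analogue_dot(weights, inputs, levels, sigma, rng)
    print(f"{levels:>3} levels, noise {sigma:.2f}: |error| = {abs(approx - exact):.3f}")
```

Whether a given error is acceptable depends on the network; the sketch only shows that fewer levels and more noise degrade the result gradually rather than catastrophically.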

Naveen Verma, an electrical engineer at Princeton University in New Jersey and founder and chief executive of start-up firm EnCharge AI in Santa Clara, expects that early applications for in-memory computing will be in laptops. EnCharge AI’s chips make use of static random-access memory (SRAM) and use crossed metal wires as capacitors to store data in the form of different amounts of charge. These chips can be made on silicon using existing processes, he says.

The chips, which are analogue, can run machine-learning algorithms at 150 tera operations per second (TOPS) per watt, compared with 24 TOPS per watt for an equivalent Nvidia chip performing the same task. By moving to a semiconductor process technology that can trace finer chip features, Verma expects his technology’s energy-efficiency figure to rise to about 650 TOPS per watt, more than four times its current value.
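TOPS per watt is easier to reason about when inverted into energy per operation. Since a watt is one joule per second, T TOPS per watt means T × 10¹² operations per joule. The conversion below uses only the figures reported above; note that vendors do not always count operations the same way (or at the same numerical precision), so such comparisons are indicative.

```python
def femtojoules_per_op(tops_per_watt: float) -> float:
    """Convert an efficiency in TOPS/W into energy per operation in femtojoules.

    T TOPS/W = T * 1e12 operations per joule, so each operation costs
    1 / (T * 1e12) J = 1000 / T fJ.
    """
    return 1e15 / (tops_per_watt * 1e12)


figures = {
    "EnCharge AI analogue chip (reported)": 150,
    "comparable Nvidia chip (reported)": 24,
    "EnCharge AI, projected on a finer process": 650,
}
for name, tops_w in figures.items():
    print(f"{name}: {tops_w} TOPS/W = {femtojoules_per_op(tops_w):.1f} fJ per operation")

print(f"advantage now: {150 / 24:.2f}x; projected: {650 / 24:.1f}x")
```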

Larger companies are also exploring in-memory computing. In 2023, IBM described an early analogue AI chip¹ that could perform matrix multiplication at 12.4 TOPS per watt. Dally says that Nvidia researchers have explored in-memory computing as well, but cautions that gains in energy efficiency might not be as great as they seem. These systems might use less power to do matrix multiplications, but the energy cost of converting data from digital to analogue form, along with other overheads, eats into these gains at the system level. “I haven’t seen any idea that would make it substantially better,” Dally says.
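Dally’s caution follows the same logic as Amdahl’s law, which is swapped in here as a generic model rather than taken from his analysis. If only part of a system’s energy goes into matrix multiplication, making that part cheaper helps only that part, and any conversion overhead is added back on top. All inputs below (70% of energy in matrix multiplication, an overhead equal to 20% of the original total) are hypothetical.

```python
def system_gain(mvm_fraction: float, mvm_speedup: float, conversion_overhead: float) -> float:
    """Amdahl-style estimate of the system-level energy-efficiency gain.

    mvm_fraction: share of the baseline digital system's energy spent on matrix multiplication.
    mvm_speedup: factor by which an analogue array cuts the energy of that share.
    conversion_overhead: extra energy for digital/analogue conversion and other
        peripheral circuits, as a fraction of the baseline total energy.
    """
    new_energy = (1 - mvm_fraction) + mvm_fraction / mvm_speedup + conversion_overhead
    return 1 / new_energy


for speedup in (10, 100, 1000):
    ideal = system_gain(0.7, speedup, 0.0)
    real = system_gain(0.7, speedup, 0.2)
    print(f"array {speedup:>4}x cheaper: {ideal:.2f}x system gain without overhead, "
          f"{real:.2f}x with it")
```

With these made-up numbers the system-level gain can never exceed 1 / (0.3 + 0.2) = 2, however efficient the analogue array becomes, which is why Burns’s question below about how long data can stay in analogue form matters.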

IBM’s Burns agrees that the energy cost of digital-to-analogue conversion is a major challenge. He says that the key is deciding whether the data should stay in analogue form when they are moved between parts of the chip, or whether it is better to transfer them as 1s and 0s. “What happens if we try to stay in analogue as much as possible?” he says.

Wang says that a few years ago he would not have expected such rapid progress in this field. But now he expects to see start-up firms bringing in-memory computing chips to market over the next few years.

A brighter future

The AI energy problem has also invigorated work in photonics. Data travel more efficiently when encoded in light than along electrical wires, which is why optical fibres are used to bring high-speed Internet to neighbourhoods, and even to connect banks of servers in data centres. However, bringing these connections onto chips has been challenging: optical devices have historically been bulky and sensitive to small temperature variations.

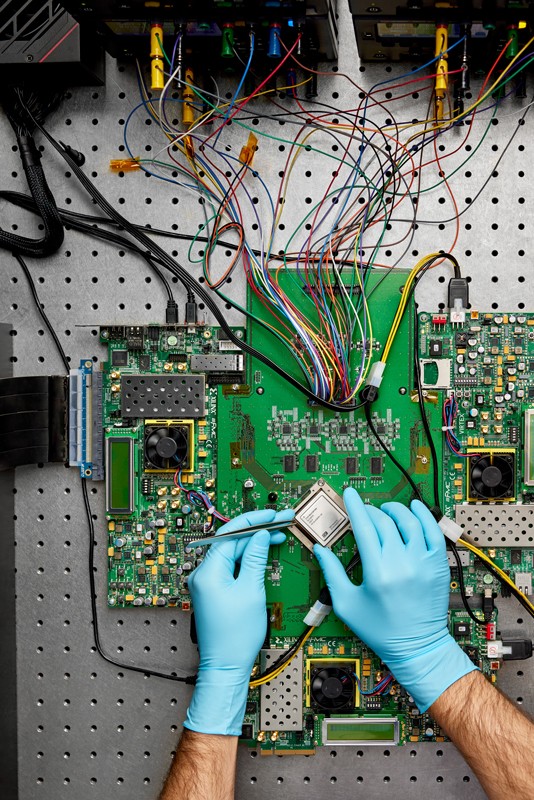

Hechen Wang tests the energy efficiency of a computer chip.

Credit: Hechen Wang

In 2022, Jelena Vuckovic, an electrical engineer at Stanford University, developed a silicon waveguide for optical data transmission between chips². Losses during electronic data transmission are on the order of 1 picojoule per bit of data (one picojoule is $10^{-12}$ joules); for optics, they are less than 100 femtojoules per bit (one femtojoule is $10^{-15}$ joules). That means Vuckovic’s device can transmit data at a given speed for about one-tenth of the energy cost of doing so electronically. The optical waveguide can also carry data on 400 channels, by taking advantage of 100 different wavelengths of light and using optical interference to create four modes of transmission.
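Link power is simply energy per bit multiplied by bit rate, so the per-bit figures above translate directly into watts. The sketch uses the quoted values (about 1 pJ per bit electronically, under 100 fJ per bit optically) and a hypothetical link speed of one terabit per second; it also checks the channel arithmetic of 100 wavelengths times four modes.

```python
PJ = 1e-12  # joules in a picojoule
FJ = 1e-15  # joules in a femtojoule

ELECTRONIC_J_PER_BIT = 1 * PJ    # order of magnitude quoted in the text
OPTICAL_J_PER_BIT = 100 * FJ     # upper bound quoted for optics
LINK_BITS_PER_SECOND = 1e12      # hypothetical 1 terabit-per-second link


def link_power_watts(joules_per_bit: float, bits_per_second: float) -> float:
    """Power drawn by a link: energy per bit times bits per second (J/s = W)."""
    return joules_per_bit * bits_per_second


electronic = link_power_watts(ELECTRONIC_J_PER_BIT, LINK_BITS_PER_SECOND)
optical = link_power_watts(OPTICAL_J_PER_BIT, LINK_BITS_PER_SECOND)
print(f"electronic: {electronic:.1f} W, optical: at most {optical:.1f} W "
      f"({optical / electronic:.0%} of the electronic figure)")

# Wavelength multiplexing combined with mode multiplexing.
WAVELENGTHS = 100
MODES = 4
print(f"independent channels: {WAVELENGTHS * MODES}")
```

The ratio, not the absolute wattage, is the point: at any fixed bit rate the optical link draws at most a tenth of the power under these per-bit figures.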

Vuckovic says that in the short term, optical waveguides could provide more energy-efficient connections between GPUs; she thinks that speeds of 10 terabytes per second will be possible. Some researchers hope to use optics not just to transmit data, but also to compute. In April, engineer Lu Fang at Tsinghua University in Beijing and her team described a photonic AI chip that they say can generate music in the style of German composer Johann Sebastian Bach and images in the style of Norwegian painter Edvard Munch while using less energy than a GPU would³. “This is the first optical AI system that could handle large-scale general-purpose intelligence computing,” says Zhihao Xu, a member of Fang’s lab. Called Taichi, the system can perform 160 TOPS per watt, which Xu says is an improvement in energy efficiency of two to three orders of magnitude compared with a GPU.

Fang’s group is working on miniaturizing the system, which currently takes up about one square metre. However, Vuckovic expects that progress in all-optical AI will be hampered by the need to convert huge amounts of electronic data into optical form, a task that would carry its own energy cost and could be impractical.

Stacking up

Stanford’s Mitra says that his dream computing system would have all of the memory and computing on the same chip. Today’s chips are mostly planar, but Mitra predicts that chips made up of 3D-stacked computing and memory layers will be possible. These would be based on emerging materials that can be sandwiched together, such as carbon-nanotube circuits. The closer physical proximity between memory and computing elements offers improvements of about 10–15% in energy use, but Mitra thinks that this can be pushed much further.

Analogue AI chips are doubtlessly extra vitality environment friendly than digital chips.

Credit: Ryan Lavine for IBM

The big challenge facing 3D stacking is the need to change how chips are fabricated, which Mitra admits is a tall order. Chips are currently made mostly of silicon, at extremely high temperatures. But 3D chips of the kind Mitra is designing must be made under milder conditions, so that building one layer doesn’t destroy the one below. Mitra’s team has shown that this is possible, layering a chip based on carbon nanotubes and resistive RAM (a type of memory technology) on top of a silicon chip. This initial device, presented in 2023, has the same performance and power requirements as a similar chip based on silicon technology⁴.

Reducing energy consumption substantially will require close collaboration between hardware and software engineers. One way to save energy is to switch off unused areas of the memory very quickly, so that they don’t leak power, then switch them back on when they are needed. Mitra says that he has seen big pay-offs when his team collaborates closely with programmers. For instance, when his team asked programmers to keep in mind that writing to a memory cell in their device costs more energy than reading from it, the programmers designed a training algorithm that yielded a 340-fold improvement in system-level energy-delay product (an efficiency metric that takes into account both energy consumption and execution speed). “In the old model, the algorithms people don’t need to know anything about the hardware,” says Mitra. That is no longer the case.
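Energy-delay product (EDP) multiplies total energy by total execution time, so an algorithm that cuts both gets rewarded twice. The toy below counts memory reads and writes for two hypothetical access patterns, where a write costs ten times a read in both energy and latency; these costs and access counts are invented for illustration and are not measurements of Mitra’s device or a reconstruction of his team’s algorithm.

```python
from dataclasses import dataclass

# Hypothetical memory costs: writes are ten times as expensive as reads.
READ_PJ, READ_NS = 1.0, 1.0
WRITE_PJ, WRITE_NS = 10.0, 10.0


@dataclass
class AccessProfile:
    reads: int
    writes: int

    def energy_pj(self) -> float:
        return self.reads * READ_PJ + self.writes * WRITE_PJ

    def delay_ns(self) -> float:
        # Assumes accesses are serialized, so their latencies simply add up.
        return self.reads * READ_NS + self.writes * WRITE_NS

    def edp(self) -> float:
        """Energy-delay product in pJ*ns; lower is better."""
        return self.energy_pj() * self.delay_ns()


write_heavy = AccessProfile(reads=1000, writes=1000)
write_aware = AccessProfile(reads=2000, writes=100)  # trades extra reads for fewer writes
print(f"energy: {write_heavy.energy_pj():.0f} vs {write_aware.energy_pj():.0f} pJ")
print(f"EDP improvement: {write_heavy.edp() / write_aware.edp():.1f}x")
```

Here the write-aware pattern does twice as many reads yet improves EDP by more than an order of magnitude, because avoiding expensive writes shortens the run and saves energy at the same time.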

“I think there’s going to be a convergence, where the chips get more efficient and powerful, and the models are going to get more efficient and less resource-intensive,” says Raghavendra Selvan, a machine-learning researcher at the University of Copenhagen.

When it comes to training models, programmers could be more selective. Instead of continuing to train models on gigantic amounts of data, they might make more gains by training on smaller, tailored data sets, saving energy and potentially getting a better model. “We need to think creatively,” Selvan says.

Schwartz is exploring the idea of saving energy by running small, ‘cheap’ models multiple times instead of running an expensive one once. His group at the Hebrew University has seen some gains from this approach when using a large language model to generate code⁵. “If it generates ten outputs, and one of them passes, you’re better off running the smaller model than the larger one,” he says.
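Schwartz’s reasoning can be framed as a simple probability calculation, assuming that each generated program can be checked automatically (for example, by running tests) and that samples pass independently. If one sample passes with probability p, at least one of k samples passes with probability 1 − (1 − p)^k. The energies and pass rates below are hypothetical, chosen only to show where the break-even lies.

```python
def pass_at_k(p: float, k: int) -> float:
    """Probability that at least one of k independent samples passes, if each passes with probability p."""
    return 1 - (1 - p) ** k


def samples_needed(p: float, target: float) -> int:
    """Smallest number of samples k for which pass_at_k(p, k) >= target."""
    if not (0 < p <= 1 and 0 <= target < 1):
        raise ValueError("need 0 < p <= 1 and 0 <= target < 1")
    k = 1
    while pass_at_k(p, k) < target:
        k += 1
    return k


# Hypothetical models: energy per generated sample (arbitrary units) and the
# probability that a single sample passes its tests.
SMALL_ENERGY, SMALL_PASS = 1.0, 0.25
LARGE_ENERGY, LARGE_PASS = 20.0, 0.60

k = samples_needed(SMALL_PASS, LARGE_PASS)
print(f"small model needs {k} samples to match one large-model sample")
print(f"energy: small {k * SMALL_ENERGY:.0f} units vs large {LARGE_ENERGY:.0f} units")
```

The comparison flips if the large model is only a few times more expensive per sample, or if the small model rarely succeeds; real samples are also not fully independent, so the sketch is optimistic about repeated sampling.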

Selvan, who has developed a tool called CarbonTracker for predicting the carbon footprint of deep-learning models, wants computer scientists to think more holistically about the costs of AI. Like Schwartz, he sees some ready fixes that have nothing to do with sophisticated chip technologies. Companies could, for instance, schedule AI training runs for times when electricity is being supplied by renewable sources.
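Scheduling a training run for a greener period can be as simple as picking the contiguous window with the lowest forecast carbon intensity. The sketch below does that with a sliding-window sum; it does not use CarbonTracker’s API, the hourly forecast values are invented, and carbon intensity stands in as a proxy for how much of the supply is renewable.

```python
def best_start_hour(intensity: list[float], duration: int) -> tuple[int, float]:
    """Return (start index, mean intensity) of the lowest-carbon contiguous window.

    intensity: forecast grid carbon intensity per hour (e.g. gCO2/kWh).
    duration: length of the training run in hours.
    A sliding-window sum keeps this O(len(intensity)).
    """
    if not 0 < duration <= len(intensity):
        raise ValueError("duration must be between 1 and the forecast length")
    window = sum(intensity[:duration])
    best_sum, best_start = window, 0
    for start in range(1, len(intensity) - duration + 1):
        # Slide the window one hour: add the new last hour, drop the old first hour.
        window += intensity[start + duration - 1] - intensity[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start, best_sum / duration


# Hypothetical 24-hour forecast with a midday solar dip.
forecast = [420, 410, 400, 390, 380, 300, 220, 180, 150, 140, 150, 170,
            210, 260, 320, 380, 430, 460, 470, 465, 455, 440, 430, 425]
start, mean = best_start_hour(forecast, 4)
print(f"start a 4-hour run at hour {start}: mean intensity {mean:.1f} gCO2/kWh")
```

A real scheduler would also weigh deadlines, forecast uncertainty and whether a job can be paused and resumed.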

Indeed, support from the companies using this technology will be crucial to solving the problem. If AI chips become more energy efficient, they might simply be used more. To prevent this, some researchers are calling for greater transparency from the companies behind machine-learning models. “We no longer know what these companies are doing: what is the size of the tool, what was it trained on,” Schwartz says.

Sasha Luccioni, an AI researcher and climate lead at US firm Hugging Face who is based in Montreal, Canada, wants model makers to provide more information on how AI models are trained, how much energy they use and which algorithm is running when a user queries a search engine or natural-language tool. “We should be enforcing transparency,” she says.

Schwartz says that, between 2018 and 2022, the computational costs of training machine-learning models increased tenfold every year. “If we follow the same path we’ve been on for years, it’s gloom and doom,” says Mitra. “But the opportunities are huge.”
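Setting Schwartz’s growth figure against Dally’s 4,000-fold GPU efficiency gain over ten years gives a rough sense of the gap. The sketch assumes, as a simplification, that energy per training run scales as compute divided by performance per watt, that the GPU trend applies evenly to the same period, and that 2018 to 2022 spans four year-on-year steps.

```python
def annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly growth factor that compounds to total_factor over `years`."""
    return total_factor ** (1 / years)


compute_growth_per_year = 10.0                        # as quoted for 2018-2022
efficiency_growth_per_year = annual_factor(4000, 10)  # 4,000-fold over ten years, as quoted

years = 4  # assumption: four year-on-year steps between 2018 and 2022
energy_growth = (compute_growth_per_year / efficiency_growth_per_year) ** years
print(f"GPU efficiency gain per year: {efficiency_growth_per_year:.2f}x")
print(f"implied growth in energy per training run over {years} years: about {energy_growth:.0f}x")
```

Under these assumptions, efficiency improving by a bit more than twofold a year falls far short of a tenfold annual rise in compute.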