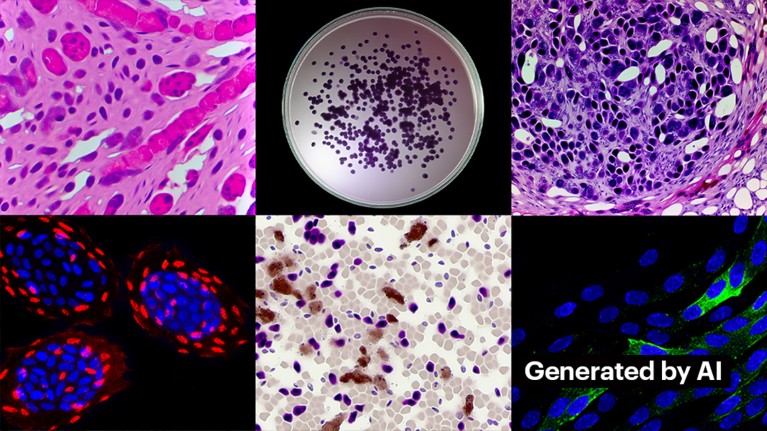

All of these images were generated by AI. Credit: Proofig AI, 2024

From scientists manipulating figures to the mass production of fake papers by paper mills, problematic manuscripts have long plagued the scholarly literature. Science sleuths work tirelessly to uncover this misconduct and correct the scientific record. But their job is becoming harder, owing to the introduction of a powerful new tool for fraudsters: generative artificial intelligence (AI).

“Generative AI is evolving very fast,” says Jana Christopher, an image-integrity analyst at FEBS Press in Heidelberg, Germany. “The people that work in my field, image integrity and publication ethics, are getting increasingly worried about the possibilities that it offers.”

The ease with which generative-AI tools can create text, images and data raises fears of an increasingly untrustworthy scientific literature awash with fake figures, manuscripts and conclusions that are difficult for humans to spot. Already, an arms race is emerging as integrity specialists, publishers and technology companies race to develop AI tools that can help to rapidly detect deceptive, AI-generated elements of papers.

“It’s a scary development,” Christopher says. “But there are also clever people and good structural changes that are being suggested.”

Research-integrity specialists say that, although AI-generated text is already permitted by many journals under some circumstances, the use of such tools for creating images or other data is less likely to be viewed as acceptable. “In the near future, we may be okay with AI-generated text,” says Elisabeth Bik, an image-forensics specialist and consultant in San Francisco, California. “But I draw the line at generating data.”

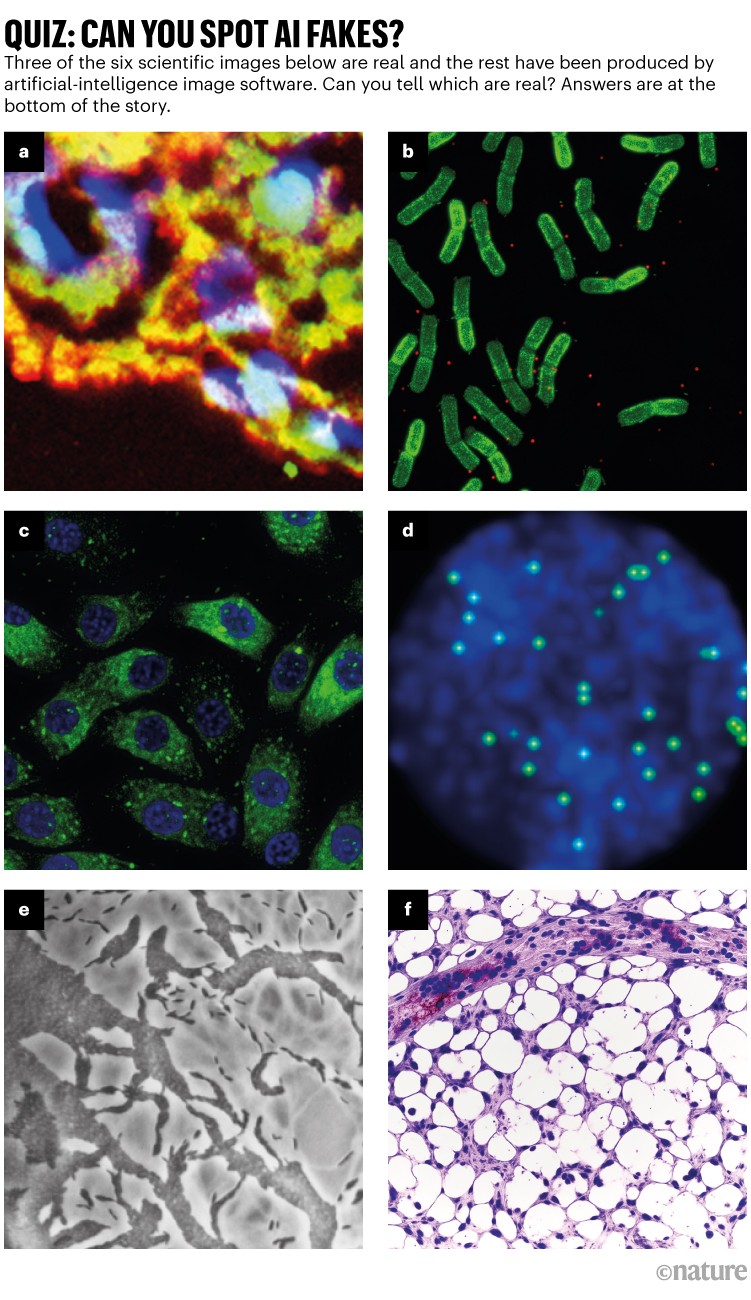

Bik, Christopher and others suspect that data, including images, fabricated using generative AI are already widespread in the literature, and that paper mills are taking advantage of AI tools to produce manuscripts en masse (see ‘Quiz: can you spot AI fakes?’).

Under the radar

Pinpointing AI-produced images poses a huge challenge: they are often almost impossible to distinguish from real ones, at least with the naked eye. “We get the feeling that we encounter AI-generated images every day,” Christopher says. “But as long as you can’t prove it, there’s really very little you can do.”

There are some clear instances of generative-AI use in scientific images, such as the now-infamous figure of a rat with absurdly large genitalia and nonsensical labels, created using the image tool Midjourney. The graphic, published by a journal in February, sparked a social-media storm and was retracted days later.

Credit: Proofig (generated images)

Most cases aren’t so obvious. Figures fabricated with Adobe Photoshop or similar tools before the rise of generative AI, particularly in molecular and cell biology, often contain telltale signs that sleuths can spot, such as identical backgrounds or an unusual absence of smears or stains. AI-made figures often lack such signs. “I see tonnes of papers where I think, these Western blots don’t look real, but there’s no smoking gun,” Bik says. “You can only say they just look weird, and that of course isn’t enough evidence to write to an editor.”

But there are signs that AI-made figures are appearing in published manuscripts. Text written using tools such as ChatGPT is on the rise in papers, given away by standard chatbot phrases that authors forget to remove and telltale words that AI models tend to use. “So we have to assume that it’s also happening for data and for images,” says Bik.

Another clue that fraudsters are using sophisticated image tools is that most of the issues that sleuths are currently detecting are in papers that are several years old. “In the past couple of years, we’ve seen fewer and fewer image problems,” Bik says. “I think most people who’ve gotten caught doing image manipulation have moved on to creating cleaner images.”

How to create images

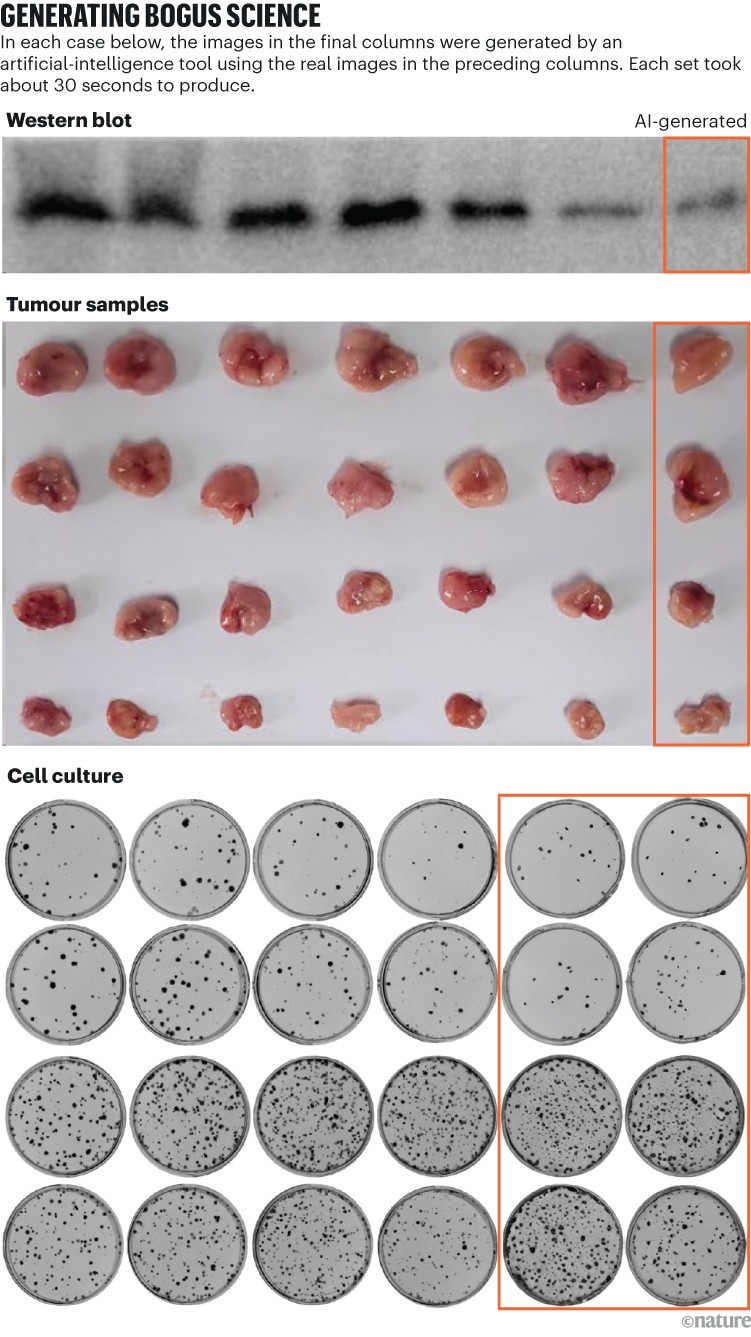

Creating clean images using generative AI is not difficult. Kevin Patrick, a scientific-image sleuth known as Cheshire on social media, has demonstrated just how easy it can be and posted his results on X. Using Photoshop’s AI tool Generative Fill, Patrick created realistic images of tumours, cell cultures, Western blots and more that could feasibly appear in scientific papers. Most of the images took less than a minute to produce (see ‘Generating bogus science’).

“If I can do this, certainly the people who are getting paid to generate fake data are going to be doing this,” Patrick says. “There’s probably a whole bunch of other data that could be generated with tools like this.”

Some publishers say that they have found evidence of AI-generated content in published studies. These include PLoS, which has been alerted to suspicious content and has found evidence of AI-generated text and data in papers and submissions through internal investigations, says Renée Hoch, managing editor of PLoS’s publication-ethics team in San Francisco, California. (Hoch notes that AI use is not forbidden in PLoS journals, and that its AI policy focuses on author accountability and transparent disclosures.)

Credit: Kevin Patrick

Other tools might also provide opportunities for people wishing to create fake content. Last month, researchers published¹ a generative-AI model for creating high-resolution microscopy images, and some integrity specialists have raised concerns about the work. “This technology can easily be used by people with bad intentions to quickly generate hundreds or thousands of fake images,” Bik says.

Yoav Shechtman at the Technion–Israel Institute of Technology in Haifa, the tool’s creator, says that the tool is helpful for producing training data for models because high-resolution microscopy images are difficult to obtain. But, he adds, it isn’t useful for generating fakes, because users have little control over the output. Existing imaging software such as Photoshop is more useful for manipulating figures, he suggests.

Weeding out fakes

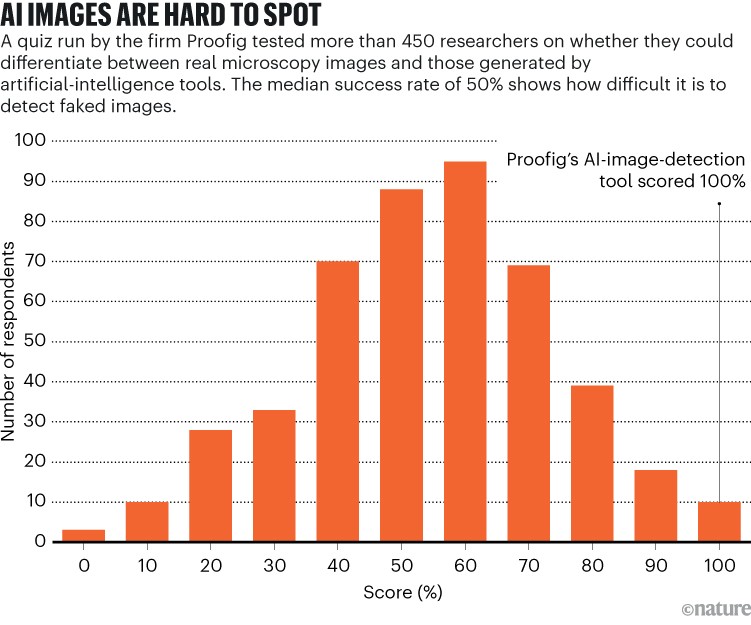

Human eyes might not be able to catch images made by generative AI, but AI might (see ‘AI images are hard to spot’).

The makers of tools such as Imagetwin and Proofig, which use AI to detect integrity issues in scientific figures, are expanding their software to weed out images created by generative AI. Because such images are so difficult to detect, both companies are creating their own databases of generative-AI images to train their algorithms.

Proofig has already released a feature in its tool for detecting AI-generated microscopy images. Company co-founder Dror Kolodkin-Gal in Rehovot, Israel, says that, when tested on thousands of AI-generated and real images from papers, the algorithm identified AI images 98% of the time and had a 0.02% false-positive rate. Kolodkin-Gal adds that the team is now working on trying to understand what, exactly, its algorithm detects.
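
To put those two figures in context, the short sketch below works through what they would imply for a batch of screened images. The rates are the ones Kolodkin-Gal reports; the batch size, the assumed share of AI-generated images and the function name `screening_outcome` are hypothetical values chosen only for illustration, and the calculation is not a description of how Proofig's software works.

```python
def screening_outcome(n_images, ai_fraction,
                      detection_rate=0.98,          # reported: AI images identified 98% of the time
                      false_positive_rate=0.0002):  # reported: 0.02% of real images wrongly flagged
    """Expected counts for a hypothetical batch of screened images."""
    ai_images = n_images * ai_fraction        # assumed share of AI-generated images
    real_images = n_images - ai_images

    flagged_correctly = ai_images * detection_rate        # AI images caught
    missed = ai_images - flagged_correctly                # AI images that slip through
    flagged_wrongly = real_images * false_positive_rate   # real images flagged in error

    total_flagged = flagged_correctly + flagged_wrongly
    # Share of all flags that point at a genuinely AI-generated image
    precision = flagged_correctly / total_flagged if total_flagged else float("nan")

    return {
        "flagged_correctly": flagged_correctly,
        "missed": missed,
        "flagged_wrongly": flagged_wrongly,
        "precision": precision,
    }


if __name__ == "__main__":
    # Hypothetical batch: 100,000 images, 1% of them AI-generated
    for name, value in screening_outcome(100_000, 0.01).items():
        print(f"{name}: {value:.4f}")
```

Under these assumed inputs, about 980 of the 1,000 AI-generated images would be flagged, roughly 20 would be missed and about 20 real images would be flagged in error. The share of correct flags depends heavily on how common AI-generated images actually are, which is not known.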

“I have great hopes for these tools,” Christopher says. But she notes that their outputs will always need to be assessed by an expert who can verify the issues they flag. Christopher hasn’t yet seen evidence that AI image-detection software is reliable (Proofig’s internal evaluation has not been published). These tools are “limited, but certainly very useful, as it means we can scale up our effort of screening submissions,” she adds.

Source: Proofig quiz

Several publishers and research institutions already use Proofig and Imagetwin. The Science journals, for example, use Proofig to scan for image-integrity issues. According to Meagan Phelan, communications director for Science in Washington DC, the tool has not yet uncovered any AI-generated images.

Springer Nature, which publishes Nature, is developing its own detection tools for text and images, called Geppetto and SnapShot, which flag irregularities that are then assessed by humans. (The Nature news team is editorially independent of its publisher.)

Fraudsters, beware

Publishing groups are also taking steps to address AI-made images. A spokesperson for the International Association of Scientific, Technical and Medical (STM) Publishers in Oxford, UK, said that it is taking the problem “very seriously” and pointed to initiatives such as United2Act and the STM Integrity Hub, which are tackling paper mills and other scientific-integrity issues.

Christopher, who is chairing an STM working group on image alterations and duplications, says that there is a growing realization that developing ways to verify raw data might be the way forward (for example, labelling images taken from microscopes with invisible watermarks akin to those being used in AI-generated text). This will require new technologies and new standards for equipment manufacturers, she adds.

Patrick and others are worried that publishers will not act quickly enough to address the threat. “We’re concerned that this will just be another generation of problems in the literature that they don’t get to until it’s too late,” he says.

Still, some are optimistic that the AI-generated content that enters papers today will be discovered in the future.

“I have full confidence that technology will improve to the point that it can detect the stuff that’s getting done today, because at some point, it will be viewed as relatively crude,” Patrick says. “Fraudsters shouldn’t sleep well at night. They could fool today’s process, but I don’t think they’ll be able to fool the process forever.”