When Sam Rodriques was a neurobiology graduate student, he was struck by a fundamental limitation of science. Even if researchers had already produced all the information needed to understand a human cell or a brain, “I’m not sure we would know it”, he says, “because no human has the ability to understand or read all the literature and get a comprehensive view.”

Five years later, Rodriques says he is closer to solving that problem using artificial intelligence (AI). In September, he and his team at the US start-up FutureHouse announced that an AI-based system they had built could, within minutes, produce syntheses of scientific knowledge that were more accurate than Wikipedia pages1. The team promptly generated Wikipedia-style entries on around 17,000 human genes, most of which previously lacked a detailed page.

Rodriques is not the only one turning to AI to help synthesize science. For decades, scholars have been trying to accelerate the onerous task of compiling bodies of research into reviews. “They’re too long, they’re incredibly intensive and they’re often out of date by the time they’re written,” says Iain Marshall, who studies research synthesis at King’s College London. The explosion of interest in large language models (LLMs), the generative-AI programs that underlie tools such as ChatGPT, is prompting fresh excitement about automating the task.

Some of the newer AI-powered science search engines can already help people to produce narrative literature reviews (a written tour of studies) by finding, sorting and summarizing publications. But they cannot yet produce a high-quality review by themselves. The toughest challenge of all is the ‘gold-standard’ systematic review, which involves stringent procedures to search and assess papers, and often a meta-analysis to synthesize the results. Most researchers agree that these are a long way from being fully automated. “I’m sure we’ll eventually get there,” says Paul Glasziou, a specialist in evidence and systematic reviews at Bond University in Gold Coast, Australia. “I just can’t tell you whether that’s 10 years away or 100 years away.”

At the same time, however, researchers fear that AI tools could lead to more sloppy, inaccurate or misleading reviews polluting the literature. “The worry is that all the decades of research on how to do good evidence synthesis starts to be undermined,” says James Thomas, who studies evidence synthesis at University College London.

Computer-assisted reviews

Computer software has been helping researchers to search and parse the research literature for decades. Well before LLMs emerged, scientists were using machine-learning and other algorithms to help to identify particular studies or to quickly pull findings out of papers. But the advent of systems such as ChatGPT has triggered a frenzy of interest in speeding up this process by combining LLMs with other software.

It would be terribly naive to ask ChatGPT, or any other AI chatbot, to simply write an academic literature review from scratch, researchers say. These LLMs learn to generate text by training on enormous amounts of writing, but most commercial AI firms do not reveal what data the models were trained on. If asked to review research on a topic, an LLM such as ChatGPT is likely to draw on credible academic research, inaccurate blogs and who knows what other information, says Marshall. “There’ll be no weighing up of what the most pertinent, high-quality literature is,” he says. And because LLMs work by repeatedly generating statistically plausible words in response to a query, they produce different answers to the same question and ‘hallucinate’ errors, including, notoriously, non-existent academic references. “None of the processes which are regarded as good practice in research synthesis take place,” Marshall says.

A more sophisticated process involves uploading a corpus of pre-selected papers to an LLM, and asking it to extract insights from them, basing its answer only on those studies. This ‘retrieval-augmented generation’ seems to cut down on hallucinations, although it does not prevent them. The process can also be set up so that the LLM will reference the sources it drew its information from.
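To make the idea concrete, here is a minimal sketch of the retrieval step, not a description of how any commercial tool works. It assumes a tiny in-memory corpus (the passages and the ids `paper_a` to `paper_c` are invented placeholders), ranks passages by simple word-overlap similarity, and assembles a prompt that tells a model to answer only from the retrieved passages and to cite them by id. The function names are hypothetical, and the call to an actual LLM is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, corpus, k=2):
    """Return the ids of up to k passages that best match the question."""
    q = Counter(tokenize(question))
    scored = [
        (cosine(q, Counter(tokenize(text))), doc_id)
        for doc_id, text in corpus.items()
    ]
    # Highest score first; ties are broken alphabetically by id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(question, corpus, k=2):
    """Build a prompt that restricts the answer to the retrieved passages."""
    sources = "\n".join(
        f"[{doc_id}] {corpus[doc_id]}" for doc_id in retrieve(question, corpus, k)
    )
    return (
        "Answer using ONLY the sources below and cite them by id.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )


# Invented placeholder passages, for illustration only.
corpus = {
    "paper_a": "Gene X regulates cell growth in mouse liver tissue.",
    "paper_b": "A randomized trial of drug Y for high blood pressure.",
    "paper_c": "Gene X mutations alter cell growth in human tumours.",
}
question = "What does gene X do to cell growth?"
print(build_prompt(question, corpus))
```

Because the prompt carries the source ids, a reader can check each cited claim against the passage it came from. Real systems typically use learned embeddings rather than word counts for the ranking step.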

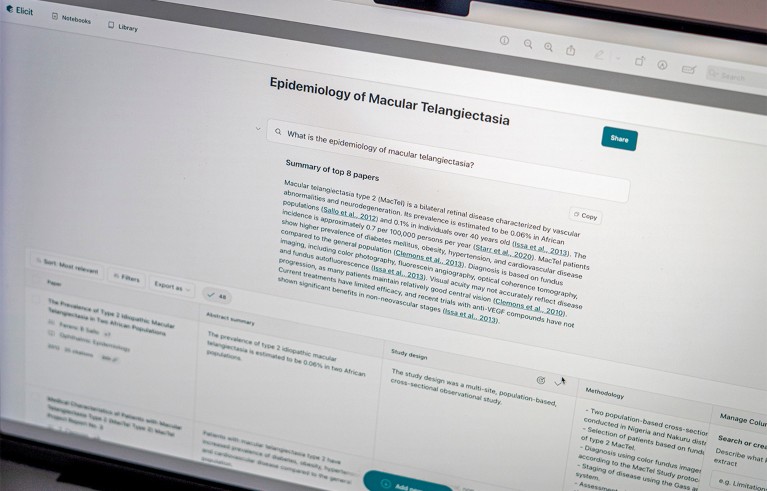

This is the basis for specialized, AI-powered science search engines such as Consensus and Elicit. Most companies do not reveal exact details of how their systems work. But they typically turn a user’s question into a computerized search across academic databases such as Semantic Scholar and PubMed, returning the most relevant results.

An LLM then summarizes each of these studies and synthesizes them into an answer that cites its sources; the user is given various options to filter the work they want to include. “They are search engines first and foremost,” says Aaron Tay, who heads data services at Singapore Management University and blogs about AI tools. “At the very least, what they cite is definitely real.”

These tools “can certainly make your review and writing processes efficient”, says Mushtaq Bilal, a postdoctoral researcher at the University of Southern Denmark in Odense, who trains academics in AI tools and has designed his own, called Research Kick. Another AI system called Scite, for example, can quickly generate a detailed breakdown of papers that support or refute a claim. Elicit and other systems can also extract insights from different sections of papers: the methods, conclusions and so on. There is “a huge amount of labour that you can outsource”, Bilal says.

Elicit, like several AI-powered tools, aims to help with academic literature reviews by summarizing papers and extracting data. Credit: Nature

But most AI science search engines cannot produce an accurate literature review autonomously, Bilal says. Their output is more “at the level of an undergraduate student who pulls an all-nighter and comes up with the main points of a few papers”. It is better for researchers to use the tools to optimize parts of the review process, he says. James Brady, head of engineering at Elicit, says that its users are augmenting steps of reviewing “to great effect”.

Another limitation of some tools, including Elicit, is that they can only search open-access papers and abstracts, rather than the full text of articles. (Elicit, in Oakland, California, searches about 125 million papers; Consensus, in Boston, Massachusetts, looks at more than 200 million.) Bilal notes that much of the research literature is paywalled and that it is computationally intensive to search a lot of full text. “Running an AI app through the whole text of millions of articles will take a lot of time, and it will become prohibitively expensive,” he says.

Full-text search

For Rodriques, money was in plentiful supply, because FutureHouse, a non-profit organization in San Francisco, California, is backed by former Google chief executive Eric Schmidt and other funders. Founded in 2023, FutureHouse aims to automate research tasks using AI.

This September, Rodriques and his team revealed PaperQA2, FutureHouse’s open-source, prototype AI system1. When it is given a query, PaperQA2 searches several academic databases for relevant papers and tries to access the full text of both open-access and paywalled content. (Rodriques says the team has access to many paywalled papers through its members’ academic affiliations.) The system then identifies and summarizes the most relevant elements. In part because PaperQA2 digests the full text of papers, running it is expensive, he says.

The FutureHouse team tested the system by using it to generate Wikipedia-style articles on individual human genes. They then gave several hundred AI-written statements from these articles, along with statements from real (human-written) Wikipedia articles on the same topic, to a blinded panel of PhD and postdoctoral biologists. The panel found that human-authored articles contained twice as many ‘reasoning errors’, in which a written claim is not properly supported by the citation, as did ones written by the AI tool. Because the tool outperforms people in this way, the team titled its paper ‘Language agents achieve superhuman synthesis of scientific knowledge’.

The team at US start-up FutureHouse, which has launched AI systems to summarize scientific literature. Sam Rodriques, their director and co-founder, is on the chair, third from right. Credit: FutureHouse

Tay says that PaperQA2 and another tool called Undermind take longer than conventional search engines to return results (minutes rather than seconds) because they conduct more-sophisticated searches, using the results of the initial search to track down other citations and key words, for example. “That all adds up to being very computationally expensive and slow, but gives a substantially higher quality search,” he says.
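The general idea of following citations outward from an initial set of hits can be sketched in a few lines. This is an illustration only, and is not drawn from PaperQA2 or Undermind: the `snowball` function, the `references` mapping and the paper ids are all hypothetical, and a real tool would fetch reference lists from a database rather than a hard-coded dictionary.

```python
from collections import deque


def snowball(seed_ids, references, max_hops=1):
    """Expand a set of seed papers by following reference links.

    `references` maps a paper id to the ids it cites. The result maps every
    paper reached to its distance (in hops) from the nearest seed.
    """
    found = {pid: 0 for pid in seed_ids}
    queue = deque(seed_ids)
    while queue:
        pid = queue.popleft()
        if found[pid] >= max_hops:
            continue  # do not expand beyond the hop limit
        for cited in references.get(pid, []):
            if cited not in found:
                found[cited] = found[pid] + 1
                queue.append(cited)
    return found


# Hypothetical citation links, for illustration only.
references = {
    "seed1": ["p2", "p3"],
    "seed2": ["p3"],
    "p2": ["p4"],
}
print(snowball(["seed1", "seed2"], references, max_hops=1))
print(snowball(["seed1", "seed2"], references, max_hops=2))
```

Each extra hop means more lookups and more papers to read, which is one simple way to see why deeper searches are slower and more costly than a single query.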

Systematic challenge

Narrative summaries of the literature are hard enough to produce, but systematic reviews are even worse. They can take people many months or even years to complete2.

A systematic review involves at least 25 careful steps, according to a breakdown from Glasziou’s team. After combing through the literature, a researcher must filter their longlist to find the most pertinent papers, then extract data, screen studies for potential bias and synthesize the results. (Many of these steps are done in duplicate by another researcher to check for inconsistencies.) This laborious method, which is supposed to be rigorous, transparent and reproducible, is considered worthwhile in medicine, for instance, because clinicians use the results to guide important decisions about treating patients.

In 2019, before ChatGPT came along, Glasziou and his colleagues set out to achieve a world record in science: a systematic review in two weeks. He and others, including Marshall and Thomas, had already developed computer tools to reduce the time involved. The menu of software available by that time included RobotSearch, a machine-learning model trained to quickly identify randomized trials from a collection of studies. RobotReviewer, another AI system, helps to assess whether a study is at risk of bias because it was not adequately blinded, for instance. “All of those are important little tools in shaving down the time of doing a systematic review,” Glasziou says.

The clock started at 9:30 a.m. on Monday 21 January 2019. The team cruised across the line at lunchtime on Friday 1 February, after a total of nine working days3. “I was excited,” says epidemiologist Anna Mae Scott at the University of Oxford, UK, who led the study while at Bond University; everyone celebrated with cake. Since then, the team has pared its record down to five days.

Could the process get faster? Other researchers have been working to automate aspects of systematic reviews, too. In 2015, Glasziou founded the International Collaboration for the Automation of Systematic Reviews, a niche group that, fittingly, has produced several systematic reviews about tools for automating systematic reviews4. Even so, “not very many [tools] have seen widespread acceptance”, says Marshall. “It’s just a question of how mature the technology is.”

Elicit is one company that says its tool helps researchers with systematic reviews, not just narrative ones. The firm does not offer systematic reviews at the push of a button, says Brady, but its system does automate some of the steps, including screening papers and extracting data and insights. Brady says that most researchers who use it for systematic reviews have uploaded relevant papers they find using other search methods.

Systematic-review aficionados worry that AI tools are at risk of failing to meet two essential criteria of the studies: transparency and reproducibility. “If I can’t see the methods used, then it is not a systematic review, it is simply a review article,” says Justin Clark, who builds review automation tools as part of Glasziou’s team. Brady says that the papers that reviewers upload to Elicit “are an excellent, transparent record” of their starting literature. As for reproducibility: “We don’t guarantee that our results are always going to be identical across repeats of the same steps, but we aim to make it so, within reason,” he says, adding that transparency and reproducibility will be important as the firm improves its system.

Specialists in reviewing say they would like to see more published evaluations of the accuracy and reproducibility of AI systems that have been designed to help produce literature reviews. “Building cool tools and trying stuff out is really good fun,” says Clark. “Doing a hardcore evaluative study is a lot of hard work.”

Earlier this year, Clark led a systematic review of studies that had used generative AI tools to help with systematic reviewing. He and his team found only 15 published studies in which the AI’s performance had been adequately compared with that of a person. The results, which have not yet been published or peer reviewed, suggest that these AI systems can extract some data from uploaded studies and assess the risk of bias of clinical trials. “It seems to do OK with reading and assessing papers,” Clark says, “but it did very badly at all these other tasks”, including designing and conducting a thorough literature search. (Existing computer software can already do the final step of synthesizing data using a meta-analysis.)

Glasziou and his team are still trying to shave time off their reviewing record through improved tools, which are available on a website they call the Evidence Review Accelerator. “It won’t be one big thing. It’s that every year you’ll get faster and faster,” Glasziou predicts. In 2022, for instance, the group released a computerized tool called Methods Wizard, which asks users a series of questions about their methods and then writes a protocol for them without using AI.

Rushed reviews?

Automating the synthesis of information also comes with risks. Researchers have known for years that many systematic reviews are redundant or of poor quality5, and AI could make these problems worse. Authors might knowingly or unknowingly use AI tools to race through a review that does not follow rigorous procedures, or which includes poor-quality work, and get a misleading result.

By contrast, says Glasziou, AI could also encourage researchers to do a quick check of previously published literature when they would not have bothered before. “AI may raise their game,” he says. And Brady says that, in future, AI tools could help to flag and filter out poor-quality papers by looking for telltale signs such as P-hacking, a form of data manipulation.

Glasziou sees the situation as a balance of two forces: AI tools could help scientists to produce high-quality reviews, but might also fuel the rapid generation of substandard ones. “I don’t know what the net impact is going to be on the published literature,” he says.

Some people argue that the ability to synthesize and make sense of the world’s knowledge should not lie solely in the hands of opaque, profit-making companies. Clark wants to see non-profit groups build and carefully test AI tools. He and other researchers welcomed the announcement from two UK funders last month that they are investing more than US$70 million in evidence-synthesis systems. “We just want to be cautious and careful,” Clark says. “We want to make sure that the answers that [technology] helps to provide to us are correct.”