Since its introduction, the NVIDIA Hopper architecture has transformed the AI and high-performance computing (HPC) landscape, helping enterprises, researchers and developers tackle the world’s most complex challenges with higher performance and greater energy efficiency.

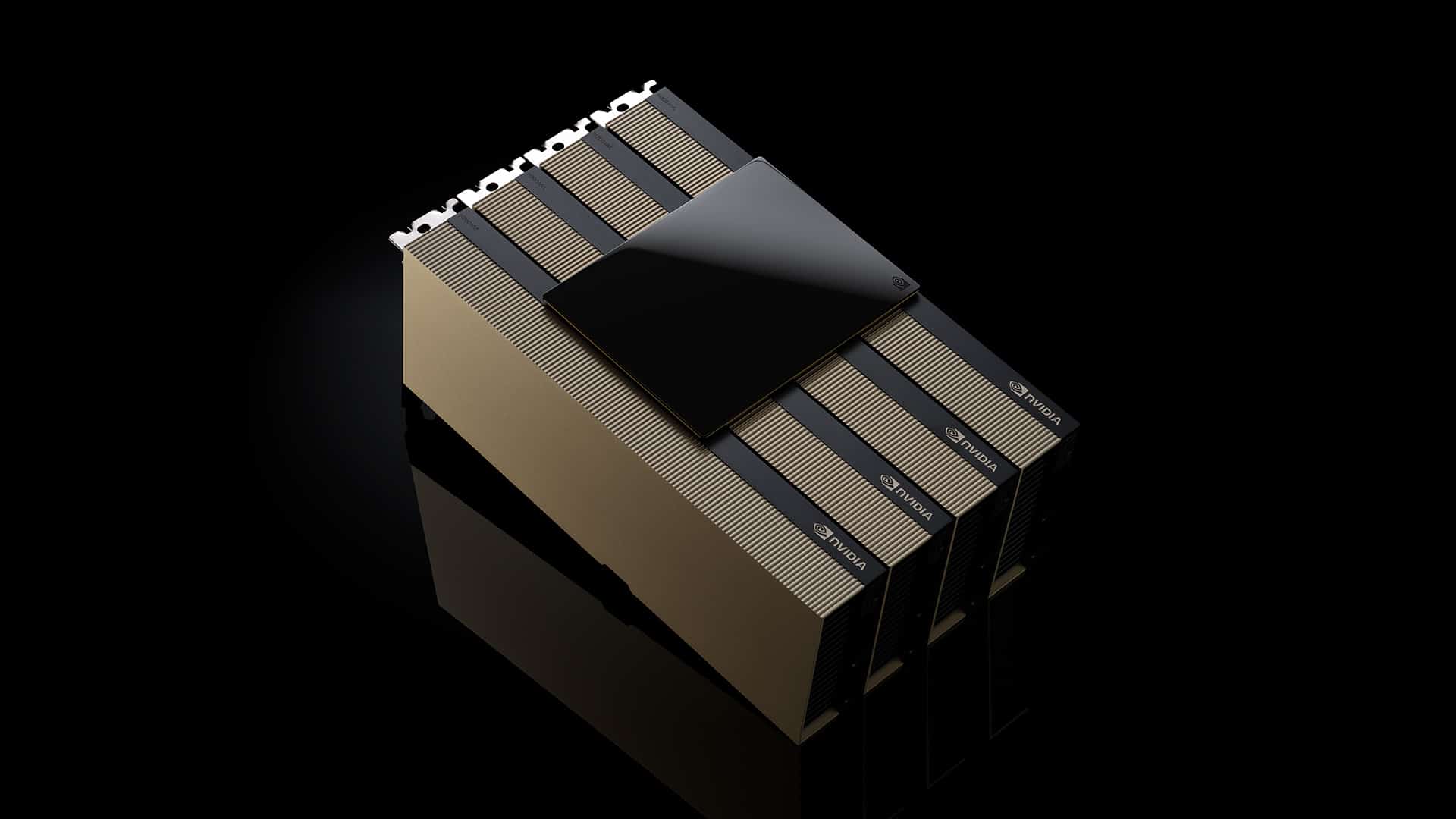

During the Supercomputing 2024 conference, NVIDIA announced the availability of the NVIDIA H200 NVL PCIe GPU, the latest addition to the Hopper family. H200 NVL is ideal for organizations with data centers looking for lower-power, air-cooled enterprise rack designs with flexible configurations to deliver acceleration for every AI and HPC workload, regardless of size.

According to a recent survey, roughly 70% of enterprise racks are 20kW and below and use air cooling. This makes PCIe GPUs essential, as they provide granularity of node deployment, whether using one, two, four or eight GPUs, enabling data centers to pack more computing power into smaller spaces. Companies can then use their existing racks and select the number of GPUs that best suits their needs.
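As a rough illustration of that deployment granularity, the sketch below estimates how many air-cooled nodes fit within a 20kW rack when each node carries one, two, four or eight GPUs. The per-GPU power draw and the per-node host overhead are placeholder assumptions, not figures from NVIDIA or the survey; substitute measured values for any real sizing exercise.

```python
# Hypothetical rack-sizing sketch. Only the 20kW rack budget comes from the
# article; the two power figures below are illustrative assumptions.
RACK_BUDGET_W = 20_000      # rack power budget cited in the survey
GPU_POWER_W = 600           # assumed per-GPU power draw (placeholder)
HOST_OVERHEAD_W = 1_000     # assumed CPU, memory, fans, NICs per node (placeholder)


def nodes_per_rack(rack_budget_w, gpus_per_node, gpu_power_w, host_overhead_w):
    """Return how many whole nodes fit within the rack power budget."""
    node_power_w = gpus_per_node * gpu_power_w + host_overhead_w
    return rack_budget_w // node_power_w


for gpus_per_node in (1, 2, 4, 8):
    nodes = nodes_per_rack(RACK_BUDGET_W, gpus_per_node, GPU_POWER_W, HOST_OVERHEAD_W)
    print(f"{gpus_per_node} GPU(s)/node: {nodes} nodes, {nodes * gpus_per_node} GPUs per rack")
```

Under these assumptions, denser nodes spend less of the budget on host overhead, while smaller nodes allow finer-grained placement across existing racks.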

Enterprises can use H200 NVL to accelerate AI and HPC applications, while also improving energy efficiency through reduced power consumption. With a 1.5x memory increase and 1.2x bandwidth increase over NVIDIA H100 NVL, companies can use H200 NVL to fine-tune LLMs within a few hours and deliver up to 1.7x faster inference performance. For HPC workloads, performance is boosted up to 1.3x over H100 NVL and 2.5x over the NVIDIA Ampere architecture generation.

Complementing the raw power of the H200 NVL is NVIDIA NVLink technology. The latest generation of NVLink provides GPU-to-GPU communication 7x faster than fifth-generation PCIe, delivering higher performance to meet the needs of HPC, large language model inference and fine-tuning.
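For a sense of scale, the 7x figure is consistent with comparing roughly 900 GB/s of NVLink bandwidth per GPU against roughly 128 GB/s for a bidirectional PCIe Gen5 x16 link. Both bandwidth values are assumptions taken from commonly quoted specifications rather than from this article, and the payload size is hypothetical. The sketch ignores latency and protocol overhead.

```python
# Back-of-the-envelope comparison of GPU-to-GPU transfer time.
# Both bandwidth figures are assumed peak values, not numbers from the article.
NVLINK_GB_PER_S = 900.0          # assumed per-GPU NVLink bandwidth
PCIE_GEN5_X16_GB_PER_S = 128.0   # assumed bidirectional PCIe Gen5 x16 bandwidth


def transfer_seconds(payload_gb, bandwidth_gb_per_s):
    """Ideal transfer time for a payload at a given peak bandwidth."""
    return payload_gb / bandwidth_gb_per_s


speedup = NVLINK_GB_PER_S / PCIE_GEN5_X16_GB_PER_S

payload_gb = 70.0  # hypothetical payload, e.g. a shard of model weights
print(f"NVLink vs PCIe Gen5 speedup: {speedup:.2f}x")
print(f"NVLink: {transfer_seconds(payload_gb, NVLINK_GB_PER_S):.3f} s")
print(f"PCIe:   {transfer_seconds(payload_gb, PCIE_GEN5_X16_GB_PER_S):.3f} s")
```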

The NVIDIA H200 NVL is paired with powerful software tools that enable enterprises to accelerate applications from AI to HPC. It comes with a five-year subscription for NVIDIA AI Enterprise, a cloud-native software platform for the development and deployment of production AI. NVIDIA AI Enterprise includes NVIDIA NIM microservices for the secure, reliable deployment of high-performance AI model inference.

Companies Tapping Into Power of H200 NVL

With H200 NVL, NVIDIA provides enterprises with a full-stack platform to develop and deploy their AI and HPC workloads.

Customers are seeing significant impact for multiple AI and HPC use cases across industries, such as visual AI agents and chatbots for customer service, trading algorithms for finance, medical imaging for improved anomaly detection in healthcare, pattern recognition for manufacturing, and seismic imaging for federal science organizations.

Dropbox is harnessing NVIDIA accelerated computing for its services and infrastructure.

“Dropbox handles large amounts of content, requiring advanced AI and machine learning capabilities,” said Ali Zafar, VP of Infrastructure at Dropbox. “We’re exploring H200 NVL to continually improve our services and bring more value to our customers.”

The University of New Mexico has been using NVIDIA accelerated computing in various research and academic applications.

“As a public research university, our commitment to AI enables the university to be on the forefront of scientific and technological advancements,” said Prof. Patrick Bridges, director of the UNM Center for Advanced Research Computing. “As we shift to H200 NVL, we’ll be able to accelerate a variety of applications, including data science initiatives, bioinformatics and genomics research, physics and astronomy simulations, climate modeling and more.”

H200 NVL Available Across Ecosystem

Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to deliver a wide range of configurations supporting H200 NVL.

Additionally, H200 NVL will be available in platforms from Aivres, ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, MSI, Pegatron, QCT, Wistron and Wiwynn.

Some systems are based on the NVIDIA MGX modular architecture, which enables computer makers to quickly and cost-effectively build a vast array of data center infrastructure designs.

Platforms with H200 NVL will be available from NVIDIA’s global systems partners beginning in December. To complement availability from leading global partners, NVIDIA is also developing an Enterprise Reference Architecture for H200 NVL systems.

The reference architecture will incorporate NVIDIA’s expertise and design principles, so partners and customers can design and deploy high-performance AI infrastructure based on H200 NVL at scale. This includes full-stack hardware and software recommendations, with detailed guidance on optimal server, cluster and network configurations. Networking is optimized for the highest performance with the NVIDIA Spectrum-X Ethernet platform.

NVIDIA technologies will be showcased on the showroom floor at SC24, taking place at the Georgia World Congress Center through Nov. 22. To learn more, watch NVIDIA’s special address.

See notice regarding software product information.