Artificial-intelligence models are able to translate an experimental method into code that runs a liquid-handling robot. Credit: Getty

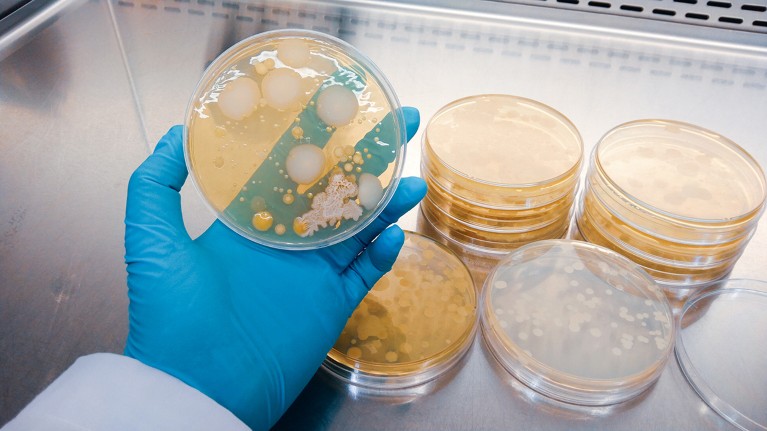

Since July, researchers at Los Alamos National Laboratory in New Mexico have been assessing how the artificial intelligence (AI) model GPT-4o can assist humans with tasks in biological research. In the evaluations, which are being conducted to advance innovations in the biosciences as well as to understand potential risks, humans ask GPT-4o various questions to help them achieve standard experimental tasks. These include maintaining and propagating cells in vitro; separating cells and other components in a sample using a centrifuge; and introducing foreign genetic material into a host organism.

In these assessments, researchers at Los Alamos are collaborating with OpenAI, the company in San Francisco, California, that developed GPT-4o. The tests are among a handful of efforts aiming to address potential biosafety and biosecurity issues posed by AI models since OpenAI made ChatGPT, a chatbot based on large language models (LLMs), publicly available in November 2022.

We argue that much more is needed.

Three of us investigate how scientific and technological innovations can affect public health and health security at the Johns Hopkins Center for Health Security in Baltimore, Maryland. Two of us research and develop solutions to public-policy challenges at the non-profit think tank RAND, which is headquartered in Santa Monica, California.

Although we see the promise of AI-assisted biological research to improve human health and well-being, this technology is still unpredictable and presents potentially significant risks. We urge governments to move faster to clarify which risks warrant most attention, and to determine what adequate testing and mitigation measures for these potential risks should entail. In short, we call for a more deliberate approach that draws on decades of government and scientific experience in reducing pandemic-scale risks in biological research<sup>1</sup>.

Experiments at speed

GPT-4o is a ‘multimodal’ LLM. It can accept text, audio, image and video prompts, and has been trained on vast quantities of these formats scraped from the Internet and elsewhere; these data almost certainly include millions of peer-reviewed studies in biological research. Its abilities are still being tested, but previous work hints at its possible uses in the life sciences. For instance, in 2023, Microsoft (a major investor in OpenAI) published evaluations of GPT-4, an earlier version of GPT-4o, showing that the LLM could provide step-by-step instructions for using the protein-design tool Rosetta to design an antibody that can bind to the spike protein of the coronavirus SARS-CoV-2. It could also translate an experimental protocol into code for a robot that can handle liquids, a capability that is “expected to greatly speed up the automation of biology experiments”<sup>2</sup>.

Also in 2023, researchers at Carnegie Mellon University in Pittsburgh, Pennsylvania, showed that a system using GPT-4, called Coscientist, could design, plan and perform complex experiments, such as chemical syntheses. In this case, the system was able to search documents, write code and control a robotic lab device<sup>3</sup>. And earlier this month, researchers at Stanford University in California and the Chan Zuckerberg Biohub in San Francisco introduced a Virtual Lab: a team of LLM agents powered by GPT-4o that designed potent SARS-CoV-2 nanobodies (a type of antibody) with minimal human input<sup>4</sup>.

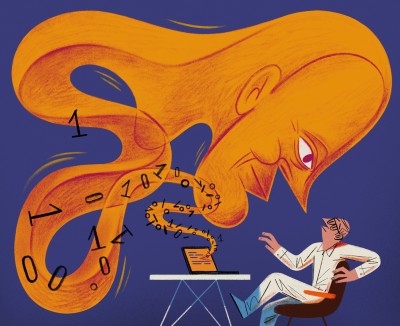

Automating lab protocols using AI systems would improve scalability and reduce costs. Credit: Nattapon Malee/Getty

OpenAI released GPT-4o in May, and is expected to release its successor, GPT-5, in the coming months. Most other leading AI companies have similarly improved their models. So far, assessments have focused mainly on individual LLMs operating in isolation. But AI developers expect combinations of AI tools, including LLMs, robotics and automation technologies, to be able to conduct experiments (such as those involving the manipulation, design and synthesis of drug candidates, toxins or stretches of DNA) with minimal human involvement.

These advances promise to transform biomedical research. But they could also bring significant biosafety and biosecurity risks<sup>5</sup>. Indeed, several governments worldwide have taken steps to try to mitigate such risks of cutting-edge AI models (see ‘Racing to keep up’). In 2023, for example, the US government secured voluntary commitments from 15 leading AI companies to manage the risks posed by the technology. Later that year, US President Joe Biden signed an Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. Among other things, this requires companies to notify the government before they release models that are trained on “primarily biological sequence data” and that use “a quantity of computing power greater than 10<sup>23</sup> integer or floating-point operations.”

The UK, the US, Canada, Japan and Singapore have now established government institutes focused on AI safety to develop standards and tools for risk management. Other countries have committed to doing the same, with those five nations and Australia, France, Kenya and South Korea making up the founding members of an International Network of AI Safety Institutes, along with the European Union, which has established a safety unit in its AI Office.

These are impressive accomplishments in a short time frame, and should be supported. How much risk reduction has been achieved from all this activity, however, is unclear, in part because much of the work of these institutions has not yet been made public.

Safety testing

Separately from considerations of risk, some developers of AI models have tried to determine what factors affect their models’ performance the most. One leading hypothesis follows a scaling law: LLM performance improves with increases in model size, data-set size and computational power<sup>6</sup>. This is partly what influenced the US government’s decision to require AI companies to notify the Department of Commerce before releasing models that use a certain amount of computing power. But scaling laws will not reliably predict what capabilities could arise and when.

In the meantime, in the absence of government policies on what risks urgently need addressing and how to mitigate them, companies such as OpenAI and Anthropic (also based in San Francisco) have followed evaluation protocols that they have developed in-house. (Many companies with AI systems, including Amazon in Seattle, Washington, Cohere in Canada, Mistral in Paris and xAI in San Francisco, have not yet made biosecurity evaluations of their models publicly available<sup>1</sup>.) In these cases, safety testing has entailed automated assessments, including those using multiple-choice questions (see go.nature.com/4tgj3p9); studies in which humans attempt to elicit harmful capabilities from the model being evaluated (known as red teaming; go.nature.com/3z4kg2p); and controlled trials in which individuals or groups are asked to perform a task with or without access to an AI model (uplift studies; go.nature.com/3unhgmr).

In our view, even when companies are conducting their own evaluations, such assessments are problematic. Often, they are too narrowly focused on the development of bioweapons. For instance, the technology company Meta conducted studies to see whether its open-source LLM Llama 3.1 could increase the proliferation of “chemical and biological weapons” (see go.nature.com/3reyqgs). Likewise, the AI company Anthropic has assessed whether its model Claude could answer “advanced bioweapon-relevant questions” (see go.nature.com/48u8tyj).

AI systems might enable the design of virus subtypes that evade immunity. Credit: Getty

The problem with this approach is that there is no publicly visible, agreed definition of ‘bioweapon’. When used in isolation, this term doesn’t differentiate between smaller-scale risks and large-scale ones. Various pathogens and toxins could plausibly be used as weapons, and many are listed in international non-proliferation agreements (see go.nature.com/3utzbw8). But few are likely to lead to the kinds of harm that could affect millions of people. Also, many pathogens, such as influenza and SARS-CoV-2, can cause severe societal disruption, but are not considered bioweapons.

Another concern is that evaluations have tended to focus too much on basic lab tasks. In the assessments being conducted by OpenAI in collaboration with Los Alamos researchers, for example, the capabilities being tested could be needed to develop something nefarious, such as a crop-destroying pathogen. But they are also essential steps for beneficial life-sciences research that do not, on their own, provide cause for alarm.

Added to all this, the evaluations conducted so far are resource-intensive and applicable mainly to LLMs. They generally involve a question-and-answer approach that requires humans to pose the questions or review a model’s answers. Finally, as mentioned earlier, evaluators need to examine how multiple AI systems operate in concert<sup>7</sup>. This is currently being requested by the US government but overlooked in industry, because companies are incentivized to test only their own models.

How to prioritize

So what does a better approach look like?

Given that resources are finite and progress in AI is rapid, we urge governments and AI developers to focus first on mitigating those harms that could result in the greatest loss of life and disruption to society. Outbreaks involving transmissible pathogens belong to this category, whether those pathogens affect humans, non-human animals or plants.

In our view, developers of AI models, working with safety and security experts, need to specify which AI capabilities are most likely to lead to this kind of pandemic-scale harm. A list of ‘capabilities of concern’ that various experts generally agree on, even if they disagree on some issues, offers a more robust starting point than does a list generated by individual companies or specialist academic groups.

As a proof of principle, in June, we gathered 17 experts in AI, computational biology, infectious diseases, public health, biosecurity and science policy for a one-day hybrid workshop near Washington DC. The aim was to determine what AI-enabled capabilities in biological research would be most likely to enable a pandemic level of death and disruption, whether caused by a pandemic in humans or a widespread animal or crop disease. Views among workshop participants differed. Still, the majority of the group members rated 7 AI capabilities from a list of 17 as being “moderately likely” or “very likely” to enable new global outbreaks of human, animal or plant pathogens. These are:

Optimizing and generating designs for new virus subtypes that can evade immunity. A study<sup>8</sup> showing that an AI model can generate viable designs for subtypes of SARS-CoV-2 that can escape human immunity was published in Nature in 2023.

Designing characteristics of a pathogen to enable its spread within or between species. AI systems might allow the design of proteins, genes or genomes that generate characteristics in pathogens that affect their transmissibility. So far, human-induced genetic alterations to pathogens have not been evolutionarily durable, but AI developers are working on models that can design genetic changes that persist<sup>9</sup>.

Combining AI tools with robotics will enable experiments involving biohazards to be conducted with minimal human involvement. Credit: Bill Varie/Getty

Generating vast amounts of data on traits that determine how easily viruses can be transmitted, which could, in turn, be used to train other AI models. Currently, determining which characteristics help a viral pathogen to transfer from one cell to another, or from one host to another, involves time-intensive wet-lab methods. Industry and academic researchers are trying to develop autonomous robotics and other AI systems that can perform some of these steps.

Assisting or completing protocols for the de novo synthesis of human, animal or plant pathogens. Commercial entities such as contract research organizations provide research services on a contractual basis, but the step-by-step protocols they perform generally involve human labour. There is now interest in automating some of this using AI systems and agents to improve scalability and reduce costs<sup>2,10</sup>.

Designing genes, genetic pathways or proteins that convert non-human animal pathogens into human pathogens. Most infectious diseases in humans arise from non-human animals. (Some diseases that began in animals can mutate into strains that infect only humans, as happened with HIV.) So far, it has been hard to predict which genes, strains or proteins increase the likelihood of a pathogen being transferred from an animal to a human. To improve such predictions, AI developers might build systems that can integrate vast quantities of pathogen genomic data with information on the traits that affect transmissibility. (Currently, there are insufficient training data to do this, and collecting these poses its own risks.)

Designing proteins, genes or genetic pathways in pathogens so that they selectively harm certain human populations. AI systems that integrate human genomic data with pathogen data might be able to discern, for good or harm, why particular human populations are more or less susceptible to a pathogen.

Modelling how diseases spread using pathogen genomic data. Epidemiological modelling refers to the computational simulation of disease outbreaks, based on the characteristics of the pathogen and the human population. AI could make such forecasting easier and more accurate. Future AI systems might even be able to provide rough estimates of spread on the basis of pathogen genomic information alone.

Guidance needed

All of these AI capabilities are being studied for their potential beneficial applications, for instance to guide the design of vaccines. Government policies that preserve such benefits while mitigating risks, or that provide guidance on what the safer alternatives might be, are therefore crucial.

But only once it is clear which AI capabilities pose pandemic-scale biosafety and biosecurity risks can effective evaluations for them be developed. In other words, there must be a strong correlation between whatever capability is being tested and the likelihood of a high-risk event occurring. If such a capability is then detected through safety testing, targeted efforts can be made to reduce the risks.

Attempts to elicit harmful capabilities from AI models during a testing phase can generate different results depending on the approach used and the level of effort made. To be effective, then, tests of capabilities must be sufficiently reliable. Also, evaluations should be undertaken by specialists who have a deep knowledge of the technology, but who are not beholden to the company that developed the AI system or systems being evaluated. Currently, this is a considerable challenge, because those who best understand how to test AI models were often involved in their development. But new government institutions, such as the US and UK AI safety institutes, can build independent expertise, as long as they continue to be adequately funded and supported. These two institutes have already recruited leaders from top AI companies.

Some have argued, reasonably, that the time and resources currently required for AI biosecurity testing put such tests out of reach for smaller AI companies and academic labs. In its recent GPT-4o evaluation, OpenAI worked with more than 100 external red-teamers to draw out the model’s potential harmful capabilities. If more of the steps involved become automated, however, safety tests of AI systems could become simple, routine and affordable. Such a shift has occurred in other fields such as cybersecurity, in which software tools have replaced human hackers.

On 20–21 November, representatives from countries that have established AI safety institutes, or that are committed to doing so, are gathering in San Francisco to hash out how companies might, in practice, develop AI systems in a safe and ethical way. And in February, heads of state and industry leaders will discuss how to build trust in AI “based on an objective scientific consensus on safety and security issues” at the global AI Action Summit in Paris.

All of this is encouraging. But the first step is to build an objective scientific consensus through proactive processes that engage diverse and independent experts.