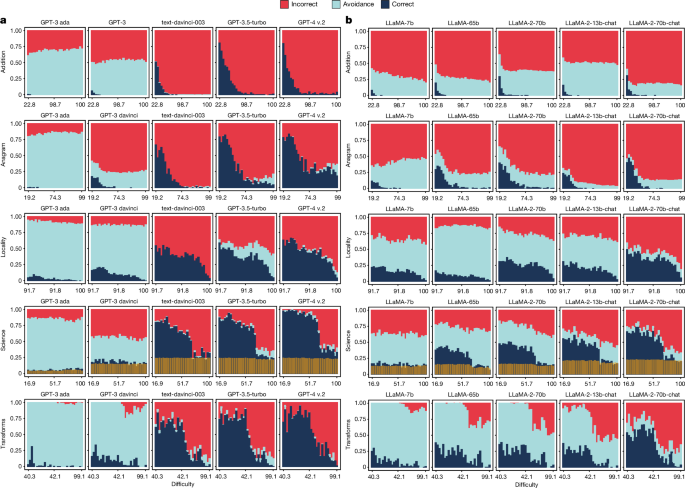

Figure 2 shows the results of a selection of models in the GPT and LLaMA families, increasingly scaled up, with the shaped-up models on the right, for the five domains: ‘addition’, ‘anagram’, ‘locality’, ‘science’ and ‘transforms’. We see that the percentage of correct responses increases for scaled-up, shaped-up models, as we approach the last column. This is an expected result and holds consistently for the rest of the models, shown in Extended Data Fig. 1 (GPT), Extended Data Fig. 2 (LLaMA) and Supplementary Fig. 14 (BLOOM family).

The values are split by correct, avoidant and incorrect results. For each combination of model and benchmark, the result is the average of 15 prompt templates (see Supplementary Tables 1 and 2). For each benchmark, we show its chosen intrinsic difficulty, monotonically calibrated to human expectations on the x axis for ease of comparison between benchmarks. The x axis is split into 30 equal-sized bins, for which the ranges must be taken as indicative of different distributions of perceived human difficulty across benchmarks. For ‘science’, the transparent yellow bars at the bottom represent the random guess probability (25% of the non-avoidance answers). Plots for all GPT and LLaMA models are provided in Extended Data Figs. 1 and 2 and for the BLOOM family in Supplementary Fig. 14.

Let us focus on the evolution of correctness with respect to difficulty. For ‘addition’, we use the number of carry operations in the sum (fcry). For ‘anagram’, we use the number of letters of the given anagram (flet). For ‘locality’, we use the inverse of city popularity (fpop). For ‘science’, we use human difficulty (fhum) directly. For ‘transforms’, we use a combination of input and output word counts and Levenshtein distance (fw+l) (Table 2). As we discuss in the Methods, these are chosen as good proxies of human expectations about what is hard or easy according to human study S1 (see Supplementary Note 6). As the difficulty increases, correctness noticeably decreases for all the models. To confirm this, Supplementary Table 8 shows the correlations between correctness and the proxies for human difficulty. Except for BLOOM for addition, all of them are high.
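As an illustration of the simplest of these proxies, the number of carry operations in a sum can be computed column by column. The following is a minimal sketch, not the implementation used in the study; the function name `count_carries` and the restriction to non-negative base-10 integers are assumptions made for this example.

```python
def count_carries(a: int, b: int) -> int:
    """Count the carry operations in the column-wise base-10 addition a + b.

    Hypothetical helper for illustration only; the study's own
    computation of the carry-based difficulty may differ in detail.
    """
    if a < 0 or b < 0:
        raise ValueError("operands must be non-negative")
    carries = 0
    carry = 0
    # Walk the digits from least to most significant.
    while a > 0 or b > 0:
        column = a % 10 + b % 10 + carry
        carry = 1 if column >= 10 else 0
        carries += carry
        a //= 10
        b //= 10
    return carries


if __name__ == "__main__":
    for x, y in [(123, 456), (58, 67), (999, 1)]:
        print(f"{x} + {y}: {count_carries(x, y)} carries")
```

Under this proxy, 123 + 456 involves no carries and would count as easy, whereas 999 + 1 involves three.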

However, despite the predictive power of human difficulty metrics for correctness, full reliability is not even achieved at very low difficulty levels. Although the models can solve highly challenging instances, they also still fail at very simple ones. This is especially evident for ‘anagram’ (GPT), ‘science’ (LLaMA) and ‘locality’ and ‘transforms’ (GPT and LLaMA), proving the presence of a difficulty discordance phenomenon. The discordance is observed across all the LLMs, with no apparent improvement through the strategies of scaling up and shaping up, confirmed by the aggregated metric shown in Fig. 1. This is especially the case for GPT-4, compared with its predecessor GPT-3.5-turbo, primarily increasing performance on instances of medium or high difficulty with no clear improvement for easy tasks. For the LLaMA family, no model achieves 60% correctness at the simplest difficulty level (discounting 25% random guess for ‘science’). The only exception is a region with low difficulty for ‘science’ with GPT-4, with almost perfect results up to medium difficulty levels.

Focusing on the trend across models, we also see something more: the percentage of incorrect results increases markedly from the raw to the shaped-up models, as a consequence of substantially reducing avoidance (which almost disappears for GPT-4). Where the raw models tend to give non-conforming outputs that cannot be interpreted as an answer (Supplementary Fig. 16), shaped-up models instead give seemingly plausible but wrong answers. More concretely, the area of avoidance in Fig. 2 decreases drastically from GPT-3 ada to text-davinci-003 and is replaced with increasingly more incorrect answers. Then, for GPT-3.5-turbo, avoidance increases slightly, only to taper off again with GPT-4. This change from avoidant to incorrect answers is less pronounced for the LLaMA family, but still clear when comparing the first with the last models. This is summarized by the prudence indicators in Fig. 1, showing that the shaped-up models perform worse in terms of avoidance. This does not match the expectation that more recent LLMs would more successfully avoid answering outside their operating range. In our analysis of the types of avoidance (see Supplementary Note 15), we do see non-conforming avoidance changing to epistemic avoidance for shaped-up models, which is a positive trend. But the pattern is not consistent, and cannot compensate for the general drop in avoidance.

Looking at the trend over difficulty, the important question is whether avoidance increases for more difficult instances, as would be appropriate for the corresponding lower level of correctness. Figure 2 shows that this is not the case. There are only a few pockets of correlation and the correlations are weak. This is the case for the last three GPT models for ‘anagram’, ‘locality’ and ‘science’ and a few LLaMA models for ‘anagram’ and ‘science’. In some other cases, we see an initial increase in avoidance but then stagnation at higher difficulty levels. The percentage of avoidant answers rarely rises quicker than the percentage of incorrect ones. The reading is clear: errors still become more frequent. This represents an involution in reliability: there is no difficulty range for which errors are improbable, either because the questions are so easy that the model never fails or because they are so difficult that the model always avoids giving an answer.
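One way to check whether avoidance rises with difficulty is to compute a rank correlation between the difficulty bins and the avoidance rate in each bin. The sketch below uses Spearman's rank correlation, which is an assumption made for this example; the text does not state which correlation coefficient is used. The helper names and the per-bin rates in the usage example are hypothetical, not results from the study.

```python
def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            result[k] = mean_rank
        pos = end + 1
    return result


def pearson(x, y):
    """Pearson correlation; returns NaN if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


if __name__ == "__main__":
    difficulty_bin = [1, 2, 3, 4, 5, 6]
    # Hypothetical avoidance rates per bin: early rise, then stagnation.
    avoidance_rate = [0.02, 0.05, 0.06, 0.06, 0.05, 0.06]
    print(spearman(difficulty_bin, avoidance_rate))
```

A coefficient near 1 would indicate that avoidance grows steadily with difficulty; values near 0 correspond to the weak or absent relationship described above.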

We next wondered whether it is possible that this lack of reliability may be motivated by some prompts being especially poor or brittle, and whether we could find a secure region for those particular prompts. We analyse prompt sensitivity disaggregating by correctness, avoidance and incorrectness, using the prompts in Supplementary Tables 1 and 2. A direct disaggregation can be found in Supplementary Fig. 1, showing that shaped-up models are, in general, less sensitive to prompt variation. But if we look at the evolution against difficulty, as shown in Extended Data Figs. 3 and 4 for the most representative models of the GPT and LLaMA families, respectively (all models are shown in Supplementary Figs. 12, 13 and 15), we observe a big difference between the raw models (represented by GPT-3 davinci) and other models of the GPT family, whereas the LLaMA family underwent a more timid transformation. The raw GPT and all the LLaMA models are highly sensitive to the prompts, even in the case of highly unambiguous tasks such as ‘addition’. Difficulty does not seem to affect sensitivity very much, and for easy instances, we see that the raw models (particularly, GPT-3 davinci and non-chat LLaMA models) have some capacity that is unlocked only by carefully chosen prompts. Things change substantially for the shaped-up models, the last six GPT models and the last three LLaMA (chat) models, which are more stable, but with pockets of variability across difficulty levels.

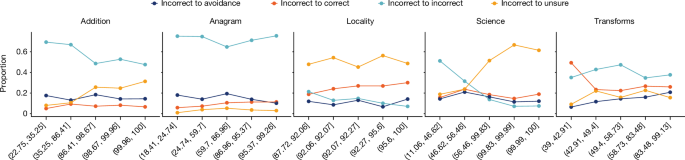

Overall, these different levels of prompt sensitivity across difficulty levels have important implications for users, especially as human study S2 shows that supervision is not able to compensate for this unreliability (Fig. 3). Looking at the correct-to-incorrect type of error in Fig. 3 (red), if the user expectations on difficulty were aligned with model results, we should have fewer cases on the left area of the curve (easy instances), and those should be better verified by humans. This would lead to a safe haven or operating area for those instances that are regarded as easy by humans, with low error from the model and low supervision error from the human using the response from the model. However, unfortunately, this happens only for easy additions and for a wider range of anagrams, because verification is generally straightforward for these two datasets.

In the survey (Supplementary Fig. 4), participants have to determine whether the output of a model is correct, avoidant or incorrect (or do not know, represented by the ‘unsure’ option in the questionnaire). Difficulty (x axis) is shown in equal-sized bins. We see very few areas where the dangerous error (incorrect being considered correct by participants) is sufficiently low to consider a safe operating region.

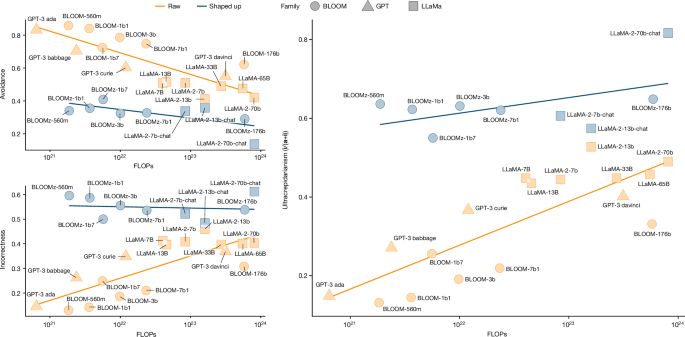

Our observations about GPT and LLaMA also apply to the BLOOM family (Supplementary Note 11). To disentangle the effects of scaling and shaping, we conduct an ablation study using LLaMA and BLOOM models in their shaped-up versions (named chat and z, respectively) and the raw versions, with the advantage that each pair has equal pre-training data and configuration. We also include all other models with known compute, such as the non-instruct GPT models. We take the same data summarized in Fig. 1 (Extended Data Table 1) and perform a scaling analysis using the FLOPs (floating-point operations) column in Table 1. FLOPs information usually captures both data and parameter count if models are well dimensioned [40]. We separate the trends between raw and shaped-up models. The fact that correctness increases with scale has been systematically shown in the literature of scaling laws [1,40]. With our data and three-outcome labelling, we can now analyse the unexplored evolution of avoidance and incorrectness (Fig. 4, left).

The plot uses a logarithmic scale for FLOPs. The focus is on avoidance (a; top left), incorrectness (i; bottom left) and ultracrepidarianism (i/(a + i); right), that is, the proportion of incorrect over both avoidant and incorrect answers.

As evident in Fig. 4, avoidance is clearly much lower for shaped-up models (blue) than for raw models (orange), but incorrectness is much higher. But even if correctness increases with scale, incorrectness does not decrease; for the raw models, it increases considerably. This is surprising, and it becomes more evident when we analyse the percentage of incorrect responses among those that are not correct (i/(a + i) in our notation; Fig. 4 (right)). We see a large increase in the proportion of errors, with models becoming more ultracrepidarian (increasingly giving a non-avoidant answer when they do not know, consequently failing proportionally more).
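The ratio i/(a + i) can be made concrete with a short calculation. The sketch below is illustrative only: the function name `ultracrepidarianism` and the outcome counts in the usage example are hypothetical and are not taken from the study's data.

```python
def ultracrepidarianism(avoidant: float, incorrect: float) -> float:
    """Return i / (a + i): the share of non-correct answers that are incorrect.

    `avoidant` (a) and `incorrect` (i) may be counts or proportions.
    Returns NaN when there are no non-correct answers at all.
    """
    if avoidant < 0 or incorrect < 0:
        raise ValueError("inputs must be non-negative")
    non_correct = avoidant + incorrect
    if non_correct == 0:
        return float("nan")
    return incorrect / non_correct


if __name__ == "__main__":
    # Hypothetical outcome counts per 100 answers (not the paper's data).
    raw_like = {"correct": 20, "avoidant": 60, "incorrect": 20}
    shaped_like = {"correct": 50, "avoidant": 5, "incorrect": 45}
    for name, m in [("raw-like", raw_like), ("shaped-like", shaped_like)]:
        u = ultracrepidarianism(m["avoidant"], m["incorrect"])
        print(f"{name}: i/(a+i) = {u:.2f}")
```

In this made-up example, correctness improves from 20 to 50, yet the share of non-correct answers that are wrong rather than avoided rises from 0.25 to 0.90, which is the pattern the ratio is designed to expose.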

We can now take all these observations and trends into account, in tandem with the expectations of a regular human user (study S1) and the limited human capability for verification and supervision (study S2). This leads to a re-understanding of the reliability evolution of LLMs, organized in groups of two findings for difficulty discordance (F1a and F1b), task avoidance (F2a and F2b) and prompt sensitivity (F3a and F3b):

F1a: human difficulty proxies serve as valuable predictors for LLM correctness. Proxies of human difficulty are negatively correlated with correctness, implying that for a given task, humans themselves can have approximate expectations for the correctness of an instance. Relevance: this predictability is crucial as an alternative success estimator when model self-confidence is either not available or markedly weakened (for example, RLHF ruining calibration [3,41]).

F1b: improvement happens at hard instances as problems with easy instances persist, extending the difficulty discordance. Current LLMs clearly lack easy operating areas with no error. In fact, the latest models of all the families are not securing any reliable operating area. Relevance: this is especially concerning in applications that demand the identification of operating conditions with high reliability.

F2a: scaling and shaping currently exchange avoidance for more incorrectness. The level of avoidance depends on the model version used, and in some cases, it vanishes entirely, with incorrectness taking important proportions of the waning avoidance (that is, ultracrepidarianism). Relevance: this elimination of the buffer of avoidance (intentionally or not) may lead users to initially overtrust tasks they do not command, but may cause them to be let down in the long term.

F2b: avoidance does not increase with difficulty, and rejections by human supervision do not either. Model errors increase with difficulty, but avoidance does not. Users can recognize these high-difficulty instances but still make frequent incorrect-to-correct supervision errors. Relevance: users do not sufficiently use their expectations on difficulty to compensate for increasing error rates in high-difficulty regions, indicating over-reliance.

F3a: scaling up and shaping up may not free users from prompt engineering. Our observations indicate that there is an increase in prompting stability. However, models differ in their levels of prompt sensitivity, and this varies across difficulty levels. Relevance: users may struggle to find prompts that benefit avoidance over incorrect answers. Human supervision does not fix these errors.

F3b: improvement in prompt performance is not monotonic across difficulty levels. Some prompts do not follow the monotonic trend of the average, are less conforming with the difficulty metric and have fewer errors for hard instances. Relevance: this non-monotonicity is problematic because users may be swayed by prompts that work well for difficult instances but simultaneously get more incorrect responses for the easy instances.

As shown in Fig. 1, we can revisit the summarized indicators of the three families. Looking at the two main clusters and the worse results of the shaped-up models on errors and difficulty concordance, we may rush to conclude that all kinds of scaling up and shaping up are inappropriate for ensuring user-driven reliability in the future. However, these effects may well be the result of the specific aspirations for these models: higher correctness rates (to excel in the benchmarks by getting more instances right but not necessarily all the easy ones) and higher instructability (to look diligent by saying something meaningful at the cost of being wrong). For instance, in scaling up, there is a tendency to include larger training corpora [42] with more difficult examples, or to give more weight to authoritative sources, which may include more sophisticated examples [43], dominating the loss over more straightforward examples. Moreover, shaping up has usually penalized answers that hedge or look uncertain [3]. That makes us wonder whether this could all be different.