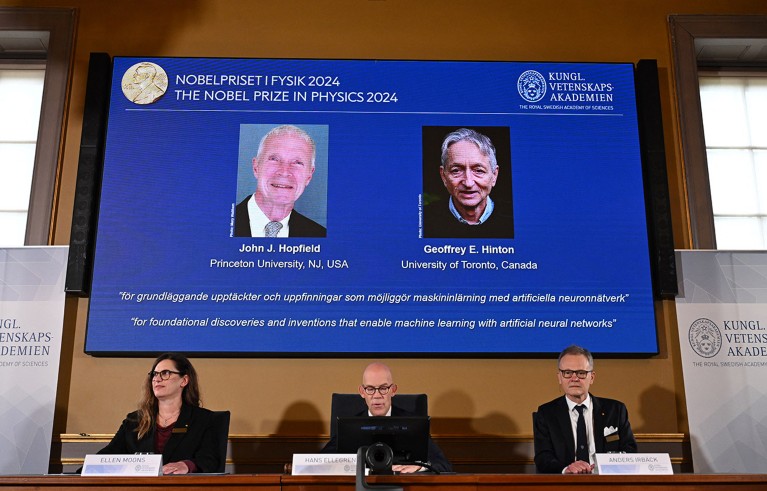

The winners were announced by the Royal Swedish Academy of Sciences in Stockholm. Credit: Jonathan Nackstrand/AFP via Getty

Two researchers who developed tools for understanding the neural networks that underpin today’s boom in artificial intelligence (AI) have won the 2024 Nobel Prize in Physics.

John Hopfield at Princeton University in New Jersey, and Geoffrey Hinton at the University of Toronto, Canada, share the 11 million Swedish kronor (US$1 million) prize, announced by the Royal Swedish Academy of Sciences in Stockholm on 8 October.

Both used tools from physics to come up with methods that power artificial neural networks, which exploit brain-inspired, layered structures to learn abstract concepts. Their discoveries “form the building blocks of machine learning, that can aid humans in making faster and more-reliable decisions”, said Nobel committee chair Ellen Moons, a physicist at Karlstad University, Sweden, during the announcement. “Artificial neural networks have been used to advance research across physics topics as diverse as particle physics, material science and astrophysics.”

Machine memory

In 1982, Hopfield, a theoretical biologist with a background in physics, came up with a network that described connections between virtual neurons as physical forces1. By storing patterns as a low-energy state of the network, the system could re-create the pattern when prompted with something similar. It became known as associative memory, because the way in which it ‘recalls’ things is similar to the brain trying to remember a word or concept based on related information.
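The recall behaviour described above can be illustrated with a minimal sketch. It assumes the common textbook formulation of a Hopfield-style network (neurons taking values of +1 or -1, Hebbian outer-product weights and one-at-a-time sign updates), which is not necessarily the exact model in the 1982 paper. The function names and the two toy patterns are illustrative, not taken from the source.

```python
import numpy as np


def train_hopfield(patterns):
    """Build a weight matrix from +1/-1 patterns using the Hebbian rule."""
    patterns = np.asarray(patterns, dtype=float)
    n_neurons = patterns.shape[1]
    weights = patterns.T @ patterns / n_neurons
    np.fill_diagonal(weights, 0.0)  # no neuron connects to itself
    return weights


def energy(weights, state):
    """Energy of a state; stored patterns sit at low-energy points."""
    state = np.asarray(state, dtype=float)
    return -0.5 * state @ weights @ state


def recall(weights, state, sweeps=5):
    """Update neurons one at a time so the state settles into a low-energy pattern."""
    state = np.array(state, dtype=float)
    for _ in range(sweeps):
        for i in range(len(state)):
            state[i] = 1.0 if weights[i] @ state >= 0 else -1.0
    return state


# Two toy patterns to store (assumed for illustration only).
stored = np.array([
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
])
weights = train_hopfield(stored)

# Prompt the network with a corrupted copy of the first pattern.
noisy = stored[0].copy()
noisy[0] = -noisy[0]

recovered = recall(weights, noisy)
print("prompt:   ", noisy)
print("recovered:", recovered.astype(int))
print("energy before and after:", energy(weights, noisy), energy(weights, recovered))
```

In this toy case the corrupted prompt has higher energy than the stored pattern, and the updates move the state downhill until it matches what was stored. This is the sense in which the network ‘recalls’ a memory from a similar cue.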

Hinton, a computer scientist, used principles from statistical physics, which collectively describes systems that have too many parts to track individually, to further develop the ‘Hopfield network’. By building probabilities into a layered version of the network, he created a tool that could recognize and classify images, or generate new examples of the type it was trained on2.

These processes differed from earlier kinds of computation, in that the networks were able to learn from examples, including from complex data. This would have been challenging for conventional software reliant on step-by-step calculations.

The networks are “grossly idealized models that are as different from real biological neural networks as apples are from planets”, Hinton wrote in Nature Neuroscience in 2000. But they proved useful and have been built upon widely. Neural networks that mimic human learning form the basis of many state-of-the-art AI tools, from large language models (LLMs) to machine-learning algorithms capable of analysing large swathes of data, including the protein-structure-prediction model AlphaFold.

Speaking by telephone at the announcement, Hinton said that learning he had won the Nobel was “a bolt from the blue”. “I’m flabbergasted, I had no idea this would happen,” he said. He added that advances in machine learning “will have a huge influence, it will be comparable with the industrial revolution. But instead of exceeding people in physical strength, it’s going to exceed people in intellectual ability”.

In recent years, Hinton has become one of the loudest voices calling for safeguards to be placed around AI. He says he became convinced last year that digital computation had become better than the human brain, because of its ability to share learning from multiple copies of an algorithm, running in parallel. “Up until that point, I’d spent 50 years thinking that if we could only make it more like the brain, it will be better,” he said on 31 May, speaking virtually to the AI for Good Global Summit in Geneva, Switzerland. “It made me think [these systems are] going to become more intelligent than us sooner than I thought.”

Motivated by physics

Hinton also won the Alan Turing Award in 2018, sometimes described as the ‘Nobel of computer science’. Hopfield, too, has won several other prestigious physics awards, including the Dirac Medal.

“[Hopfield’s] motivation was really physics, and he invented this model of physics to understand certain phases of matter,” says Karl Jansen, a physicist at the German Electron Synchrotron laboratory (DESY) in Zeuthen, who describes the work as “groundbreaking”. After decades of developments, neural networks have become an important tool in analysing data from physics experiments and in understanding the types of phase transitions that Hopfield had set out to study, Jansen adds.

Lenka Zdeborová, a specialist in the statistical physics of computation at the Swiss Federal Institute of Technology in Lausanne (EPFL), says that she was pleasantly surprised that the Nobel Committee recognized the importance of physics ideas for understanding complex systems. “It is a very generic idea, whether it’s molecules or people in society.”

In the last five years, the Abel Prize and Fields Medals have also celebrated the cross-fertilization between mathematics, physics and computer science, particularly contributions to statistical physics.

Both winners “have brought incredibly important ideas from physics into AI”, says Yoshua Bengio, a computer scientist who shared the 2018 Turing Award with Hinton and fellow neural-network pioneer Yann LeCun. Hinton’s seminal work and infectious enthusiasm made him a great role model for Bengio and other early proponents of neural networks. “It inspired me incredibly when I was just a university student,” says Bengio, who is director of the Montreal Institute for Learning Algorithms in Canada. Many computer scientists regarded the neural network as fruitless for decades, says Bengio; a major turning point was when Hinton and others used it to win a major image-recognition competition in 2012.

Brain-model benefits

Biology has also benefited from these artificial models of the brain. May-Britt Moser, a neuroscientist at the Norwegian University of Science and Technology in Trondheim and a winner of the 2014 Nobel Prize in Physiology or Medicine, says she was “so happy” when she saw the winners announced. Versions of Hopfield’s network models have been useful to neuroscientists, she says, when investigating how neurons work together in memory and navigation. His model, which describes memories as low points of a surface, helps researchers to visualize how certain thoughts or anxieties can get fixed and retrieved in the brain, she adds. “I love to use this as a metaphor to talk to people when they are stuck.”

Today, neuroscience relies on network theories and machine-learning tools, which stemmed from Hopfield and Hinton’s work, to understand and process data on thousands of cells simultaneously, says Moser. “It is like a fuel for understanding things that we couldn’t even dream of when we started in this field”.

“The use of machine-learning tools is having immeasurable impact on data analysis and our potential understanding of how brain circuits may compute,” says Eve Marder, a neuroscientist at Brandeis University in Waltham, Massachusetts. “But these impacts are dwarfed by the many impacts that machine learning and artificial intelligence are having in every aspect of our daily lives.”