After a decade building quantum-computing hardware, Jacques Carolan decided he wanted a change. “I wanted to work on technologies that were more directly related to human health,” he says. So in 2021, he pivoted to neuroscience with the aim of building optical technologies to understand the brain.

Last year, another opportunity turned Carolan’s head. The UK government was setting up a high-risk research funding agency. It was modelled on the famed US Defense Advanced Research Projects Agency, or DARPA, which helped to pioneer some of the world’s most consequential technologies, including the Internet and personal computers. The UK version, the Advanced Research and Invention Agency (ARIA), aimed to mimic that success, except that it avoids DARPA’s connection to military research. ARIA sought its inaugural programme directors with a seductive application question: if you had £50 million in research funding to change the world, what would you do?

Carolan applied, highlighting his interest in developing technologies to address neurological disorders. He is now one of the first eight programme directors at ARIA, where he has unusual freedom to select the projects that receive money from a four-year, £800-million (US$1-billion) budget. “It’s been a wild ride,” says Carolan.


Funders and scientists around the world are watching to see whether ARIA can succeed at one of the most difficult challenges in science: working out how to spur researchers to develop truly revolutionary innovations.

Formally established in 2023 by an act of Parliament, ARIA is now in its first year of full operation. Its mission is to “empower scientists and engineers to reach for the edge of the possible and unlock breakthroughs that can benefit everyone”, says its chief executive, Ilan Gur, a materials engineer and entrepreneur who moved from Berkeley, California, to lead ARIA in London.

ARIA joins an ecosystem of funders that is dominated by UK Research and Innovation (UKRI), a government super-funder with a £9-billion annual budget that oversees discipline-specific research councils, and Innovate UK, which aims to commercialize technologies. But the research councils can be conservative in what they fund, and Innovate UK mainly aids technology transfer, says Paul Nightingale, who studies innovation policy at the University of Sussex near Brighton, UK.

“Having something that’s focused on pushing the boundaries of really high, ambitious research, and having it managed in a DARPA sort of way, that isn’t something that UKRI really can do,” he says.

UKRI was consulted extensively on ARIA’s creation, says Daniel Shah, head of investment planning and strategy at UKRI, and the organizations’ leaders connect regularly. “We’re looking to learn from the ARIA experience, and they’re looking to learn from us,” he says.

After the government announced ARIA’s creation in 2021, some researchers criticized its planning as being slow and opaque. But science-policy experts say the agency has moved quickly since ‘exiting stealth mode’, as Gur puts it, last year. Almost all of the directors ARIA recruited have now defined their programme’s research focus. Carolan’s is on precision neurotechnologies; others span topics from artificial intelligence (AI) safety and robotics to engineering plants that are more resilient to pests and climate change. Some have advertised their first funding calls and made their first awards.

ARIA’s chief executive, Ilan Gur, was a programme manager at the US agency ARPA-E. Credit: Jessica Hallett/Nature

Enshrined in the directors’ roles is the ability to “shut-down or pivot dead-end efforts or double down on those that show promise”, says ARIA’s website. These are freedoms that do not exist elsewhere in UK research funding.

The D-word

The idea of a ‘UK ARPA’ has been explored for years, says David Bott, an innovation specialist who holds a fellowship at Warwick Manufacturing Group, a research centre at the University of Warwick in Coventry, UK. Bott previously led a precursor to Innovate UK called the Technology Strategy Board and, as far back as 2013, he was part of a UK government fact-finding mission to study DARPA. But the idea came to fruition only after appearing as an election-manifesto promise made by Boris Johnson’s Conservative Party in 2019.

Capturing the freedom of DARPA was the guiding principle in ARIA’s design, just without the focus on defence, says James Phillips, a systems neuroscientist who left research to lay the policy groundwork for ARIA in the UK Prime Minister’s office in 2020.

Although DARPA was founded as ARPA in 1958 by the US government in response to the Soviet launch of the Sputnik satellite, the defence element became integral to the US agency’s operations. Its ultimate goal was to ensure US technological supremacy on the battlefield. A truth that many in research funding acknowledge is that war is a more urgent incentive for progress than the more general goal of improving society.

But the defence focus is not the only thing that made DARPA successful, says Victor Reis, a retired US government official who directed the agency from 1990 to 1991. Four things were key to DARPA’s effectiveness, says Reis: freedom to make decisions, moving fast, term limits for programme managers and the ability to forge relationships with the most important people in research and industry.


DARPA’s programme managers and office directors had more autonomy and power than is typical at funding agencies, and Reis found that making quick decisions on their proposals was the key to being an effective head. “I said I’ll sign anything within 24 hours,” he says. “That made a lot of happy campers.”

Phillips’s small team particularly wanted to define the scope of ARIA’s legal freedoms to operate as it pleased. For instance, could the new agency make a funding decision that the government disagrees with? “That was very, very important to us,” says Phillips, now a senior policy adviser at the Tony Blair Institute for Global Change, a think tank in London. “Most of the time this research fails, and that’s what government doesn’t understand.”

Phillips’s other priority was finding the right leader: someone with intimate knowledge of the ARPA model. “The way we described this was a bit like a yoghurt culture. You’ve got to get a bit of the old culture to start the new one,” he says. Gur, an early programme manager at the US Advanced Research Projects Agency–Energy, a DARPA-style offshoot, was the ideal candidate, says Phillips.

Edge of the possible

The first cohort of programme directors began their term last October and, along with Gur, have a three-year term that can be extended.

The directors’ starting point was to define ‘opportunity spaces’: areas “bigger than a programme, smaller than a field”, says Carolan, where they see scope for an innovation that would be highly consequential for society, but which lack attention and funding.

Most of those spaces have been honed into programmes, such as the one Carolan developed on neurotechnology, which is backed by £69 million. It highlights the burden of neurological diseases, from conditions such as Parkinson’s disease to mental-health disorders. Carolan hopes to help create technologies that can interface with the brain at the circuit level, because most brain disorders are rooted in its wiring, he says.

Critical to success, say the programme directors, is the freedom to fund a diverse community of scientists, technologists and businesses that can work together towards a goal, although taking different approaches. “How you fund science shapes how you do science,” says Gemma Bale, one of ARIA’s directors. She and others see ARIA as part of a wave of experimentation in metascience, the science of how research is conducted and funded.

Sarah Bohndiek (left) and Gemma Bale co-direct an ARIA programme called Forecasting Tipping Points. Credit: Jessica Hallett/Nature

This month, Bale and her co-director, Sarah Bohndiek, announced their programme, called Forecasting Tipping Points. As the name suggests, it seeks to create an early-warning system for Earth’s climate tipping points. Bale and Bohndiek, both medical physicists who do part-time academic research at the University of Cambridge, UK, highlight big gaps in monitoring Earth’s vulnerable environment. “We risk crossing climate tipping points within the next century,” says Bohndiek. “Without any early warning, we have no adequate way of preparing, adapting, mitigating or intervening.”

Scientific strength

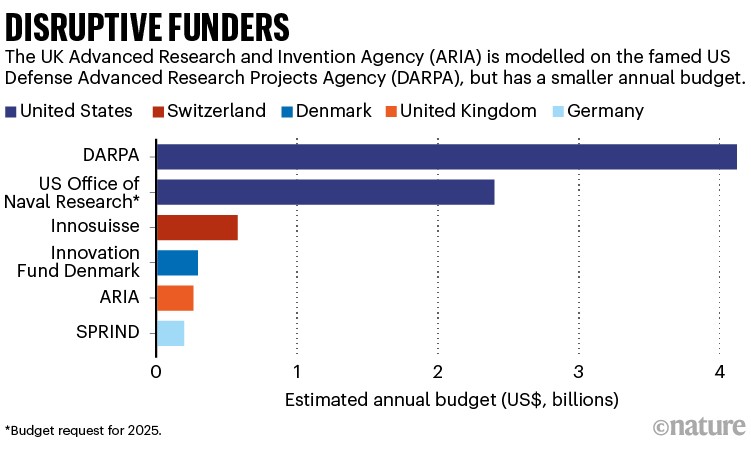

ARIA’s overall annual budget is just 1.2% of the UK government’s 2022 spending on research and development. By comparison, DARPA’s, at $4.2 billion, represents 2.2% of the US federal government’s 2022 spend. Still, Gur sees the strength of ARIA as lying in the scientific talent that it can draw on.

There are a few comparable programmes around the world, says Nightingale, including Innosuisse in Switzerland; Innovation Fund Denmark; and the German agency SPRIND. SPRIND was established in 2019 and has a similar annual budget to ARIA: about €220 million (US$240 million) in 2024 (see ‘Disruptive funders’).

Source: Agency budgets analysed by Nature

Rafael Laguna de la Vera, SPRIND’s director, based in Leipzig, says that he was consulted by Phillips’s team and speaks occasionally to the ARIA leadership. SPRIND’s funding approach is more ‘bottom-up’ than ARIA’s, says Laguna de la Vera: the German agency maintains a rolling call for funding proposals. He says that the main challenge in building a disruptive funder has been convincing government ministries to give the agency the freedom to operate differently from existing funders.

“Our reason for existence is to do it differently than we did before. So here you have the conflict.” After establishing the agency, Laguna de la Vera fought for three years to get the German government to pass a law, similar to ARIA’s act of Parliament, that guarantees the funder freedoms in operation.

Nightingale suggests that the US Office of Naval Research, which is smaller than DARPA, with a $2.4 billion budget request for 2025, might be a comparable agency. He says this is because of the way it selects the most promising candidates at industrial laboratories or universities, and because its results have had a disproportionate impact.

First contracts

Researchers and science-policy experts whom Nature contacted say it is hard to evaluate ARIA or find much to criticize so far, given that the agency has just started work. But a positive sign is that it has recruited well, Nightingale and others say. “It’s a very impressive, smart team,” says David Willetts, a former UK science minister who retains a strong interest in research and innovation policy.

At ARIA, Carolan is now fielding proposals for his first funding calls. Internal and external experts help to review applications. But the ARIA directors have the freedom to ultimately decide who receives funding.

Jacques Carolan is director of an ARIA programme on precision neurotechnologies. Credit: Jessica Hallett/Nature

Other programme directors are flexing their freedoms in different ways. David ‘davidad’ Dalrymple, director of the £59-million programme on safeguarded AI, last month appointed artificial-intelligence pioneer Yoshua Bengio as his programme’s scientific director, to provide strategic advice and technical feedback. That appointment was an early indication of success, says Phillips, given Bengio’s sought-after status. The goal of Dalrymple’s programme is to construct an AI ‘gatekeeper’ system that is tasked with understanding and reducing the risks of other AI agents.

Feedback from the research community has also been positive. “I think they’re doing something very, very important,” says Phillip Stanley-Marbell, a computer engineer whose firm Signaloid signed ARIA’s first funding contract, on improving the efficiency of large language models. Signaloid, a spin-out company of the University of Cambridge, hopes this work will contribute to wider efforts to stop the models ‘hallucinating’ information.

Stanley-Marbell, who also has a post at the University of Cambridge, has had a varied career in academia and at industry giants such as Apple. The difference from his previous grants is that the deliverables in his ARIA contract are specific and the contract can be terminated at any time. “It’s been a very rigorous but very pleasant process, very factual and very rational,” he says.

One controversial feature of ARIA is that, unlike other UK government funders and DARPA, it is not subject to freedom-of-information requests about its work. Laguna de la Vera says that exemption from such requests is a positive for a disruptive funder, in part because many people making the requests might misinterpret what an agency is doing. It also protects competitive interests, he argues. “It has nothing to do with military innovation or anything, but you want to be secret, right? Because we want to make this happen here in Europe and not to be stolen in a nanosecond by people we don’t like.”


ARIA says that the exemption was debated extensively by Parliament, and that it keeps its “scientists’ focus where it matters most”. The agency adds that it is committed to building in the open, and publishes information on all its programmes and funded projects.

Exemption from scrutiny is a benefit, but also a risk, says Nightingale. “It may be that ARIA will invest in areas of research that are controversial, and then there will be pushback,” he says. “There are always trade-offs.”

At DARPA, there was an incentive to avoid controversy, says Reis, recalling what his superiors told him: “You can do whatever you want. Just stay out of the papers.”

A decade to shine

It will take years to evaluate ARIA’s success, say Nightingale and others. The agency’s existence is guaranteed for ten years in law, and Gur says that is the timescale over which its impact should be measured.

“One measure is a programme or a project that we fund leads to a technological breakthrough that just changes the conversation globally on what people think is possible,” he says.

But that will take time. At the end of Carolan’s programme, in four years, he doesn’t expect to have achieved it. “The length of the programme isn’t long enough to build a breakthrough technology, get it through the regulatory pipeline, do all your preclinical models and get into humans,” he says. His measure of success is “to de-risk technologies such that other folks can pick it up at the end of the programme”.

In future, Willetts would like to see big-spending government departments invest some of their money to develop technologies that originated from ARIA programmes. That “would be a great sign of success” that could make ARIA stand out globally, he says.

Many agree that it is essential to accept failure in the arena of high-risk research. Maybe out of 20 projects, says Nightingale, 19 won’t do anything, but one could change the game. “Failure is a feature, not a bug.”