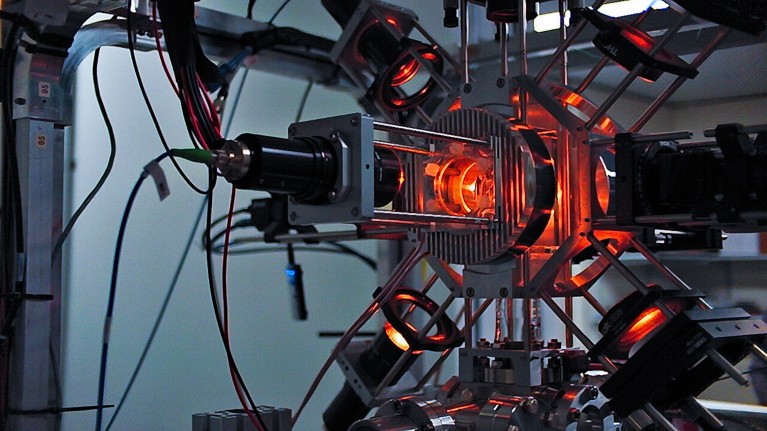

A quantum sensor that measures local gravity using free-falling atoms. Credit: Isabella Moore/New York Times/Redux/eyevine

Quantum technologies hold great promise for aiding national defence by sharpening how nations collect data, analyse intelligence, communicate, and develop materials and weapons. For example, quantum sensors, which use quantum behaviours to measure forces and radiation, can detect objects with precision and sensitivity, even underground or underwater. Quantum communications systems that are resistant to jamming could revolutionize command and control.

Interest is growing globally. For example, in 2023, the US Department of Defense announced a US$45-million project to integrate quantum components into weapons systems to increase the precision of targeting. The country also tested a quantum receiver for long-range radio communications. The UK Ministry of Defence (which funds some of our research) is investing in quantum sensors and clocks. Earlier this year, it tested a quantum-based navigation system that cannot be jammed. India’s Ministry of Defence is investing in the use of quantum ‘keys’ to encrypt sensitive military data. China is also developing quantum capabilities for defence, including a quantum radar system that could overcome ‘stealth’ technology, which is designed to make aeroplanes or ships, for example, hard to detect using conventional radar.

However, as well as promises, these uses come with ethical risks1,2 (see ‘Key risks of using quantum technologies in defence’). For example, powerful quantum computers could enable the creation of new molecules and forms of chemical or biological weapons. They could break the cryptographic measures that underpin secure online communications, with catastrophic consequences for digitally based societies. Quantum sensors could be used to enhance surveillance, breaching rights to privacy, anonymity and freedom of communication. Quantum algorithms could be difficult to reverse-engineer, which might make it hard to ascribe responsibility for their outcomes (a ‘responsibility gap’).

Some of these risks are similar to those associated with the use of artificial intelligence (AI), which is also being used for defence purposes. This is good news, because it allows us to tackle the risks by building on existing research and lessons learnt from AI ethics. However, although the risks might be similar, their drivers, likelihoods and impacts differ and depend on the unique characteristics of quantum technologies.

That is why it is crucial to develop ethical governance that is focused specifically on quantum technologies. So far, defence organizations have remarked on this need, for example in the Quantum Technologies Strategy from the North Atlantic Treaty Organization (NATO) and in the 2020 US National Defense Authorization Act. Yet little work has been done to develop an ethical approach to the governance of quantum applications in defence1,3.

Here we begin to fill this gap, and set out six principles for the responsible design and development of quantum technologies for defence. We propose an ‘anticipatory ethical governance approach’, that is, one that considers the ethical risks and opportunities that might arise as decisions are made, from the design and development phases to end use. This approach will allow defence organizations to put measures in place to mitigate ethical risks early, rather than paying high costs later.

Begin now

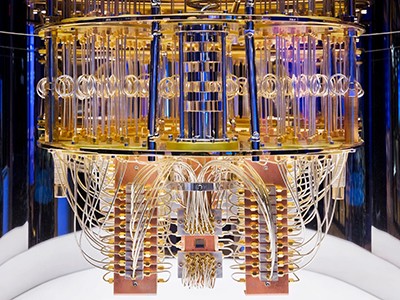

The lack of research on the ethical governance of quantum technologies in defence stems from these being emerging technologies with varying levels of maturity. Quantum sensors, for example, are already on the market, but other applications, such as quantum computers, are only now transitioning out of the laboratory or are at an embryonic stage.

It is still unclear whether quantum technologies, once they are mature, will be small, light and power-efficient enough to meet the requirements for defence use. Many such technologies require sophisticated cooling systems, and these are bulky. For example, IBM’s Goldeneye cryostat, a prototype ‘super-fridge’ designed to cool quantum computers to temperatures colder than outer space, weighs more than 6 tonnes, has a volume of almost 2 cubic metres and requires vacuum pumps and helium isotopes to run.

Thus, defence organizations are either cautious about investing in ethical analyses of technologies that are still at an early stage and might never come to fruition, or they view such efforts as premature, unnecessary and impractical. The former approach is sensible: governance should evolve with the technologies. The latter approach is dangerous and conceptually mistaken.

It rests on the ‘neutrality thesis’, the idea that technology itself is ethically neutral and that its implications emerge only with its use, implying that no ethical governance of quantum tech is needed before it is used. This is wrong: the ethical implications of any technology emerge at the design and development stages, which are informed by ethically loaded choices4.

For example, design and development decisions dictate whether AI models are more or less interpretable5 or subject to bias6. AI governance has lagged behind the pace of development of these technologies, raising several issues that policymakers are only just beginning to grapple with, from who has access to codes to how much energy AI systems use.

As scholars and policymakers have become more aware of the specific risks and potential that AI systems pose, most now agree that ethical analyses should inform the entire AI life cycle. Those eyeing quantum technologies, especially in defence, should heed these lessons and start to weigh the ethical risks now.

Successful governance hinges on getting the timing of policy interventions right. This is often portrayed as a dilemma: if governance comes too soon, it might hinder innovation; if it comes too late, risks and harms might be hard to mitigate7. But this binary view is also mistaken. The timing of tech governance is analogue, not binary. Governance should accompany each moment of the innovation life cycle, with measures that are designed to support it and that are proportionate to the risks each moment poses.

The goal is not to curb innovation, but to regulate and shape it as it develops, eliciting its good potential while ensuring that this does not come at the cost of the values underpinning our societies.

Here, we outline six principles for the responsible design and development of quantum technologies in defence. The principles build on those outlined in the literature on responsible innovation of quantum technologies1,2, on human rights and democratic values, on ethical conduct in war (just war theory) and on lessons learnt from AI governance.

Develop a model for categorizing risks

Any defence organization that funds, procures or develops quantum technologies should build a model for categorizing the risks posed by these technologies. This will be difficult owing to the lack of information about the risk types, drivers and uses of quantum tech8.

Thus, for now, we suggest categorizing risks on a simple scale, from more to less predictable: as ‘known knowns’, ‘known unknowns’ or ‘unknown unknowns’. Defence organizations can use these categories to prioritize which risks to address and to build strategies for mitigating them. The costs of ethical governance would thus be proportionate to the risks and to the level of maturity of the innovation, and therefore justifiable.

For example, quantum sensing poses known-known risks for privacy and mass surveillance, which can be tackled now. Defence organizations might consider when and where these risks could occur, and the magnitude of their impact. Criteria could be set in the next few years for the design, development and use of these technologies to ensure that any privacy breaches remain necessary and proportionate. For example, access to them could be tracked, or restricted for states that are known to violate human-rights laws.

A stealth bomber (centre) is hard to detect using conventional radar. Credit: Gotham/GC Images

At the same time, an organization might start focusing on harder-to-assess known-unknown risks for less mature technologies. Risks relating to the supply chain for quantum technology are one example. Such technologies require specific materials, such as high-purity helium-3, superconducting metals and rare-earth elements, which are limited in availability and often sourced from geopolitically sensitive regions. These risks concern access to the resources and the environmental impacts of their extraction, as well as strategic autonomy, should the supply chain be disrupted by political instability, export restrictions or supply-chain breakdowns.

Mitigating these risks might require redesigning the supply chain and the allocation of critical resources, as well as finding sustainable solutions for mining and processing. Governments’ geopolitical postures should account for these needs and avoid disrupting commercial relations with strategically important partners. Implementing these measures will take time and effort, underscoring the need to address the ethics of quantum technologies now, rather than later.

By their nature, unknown-unknown risks are difficult to predict, but they might become clear as quantum technologies mature and their ethical, legal and social implications become evident. The main idea of our approach is to act on them as early as possible.

Defence organizations should not run risk-categorization models by themselves9. Experts and other stakeholders should be involved, to ensure that the scope of the modelling is broad and that the information is accurate and timely. The process should include physicists and engineers familiar with how quantum technologies work, as well as national defence and security practitioners. It should also involve experts in international humanitarian law, human rights, the ethics of technology and war, and risk assessment.

Counter authoritarian and unjust uses

Malicious uses of quantum technologies pose threats that must be identified and mitigated. These uses need oversight, and a compelling and democratic vision for the innovation and adoption of these technologies should be developed.

For example, some state actors might use quantum computing to break encryption standards for repressive purposes, such as monitoring and surveillance, or to increase the destructive power of weapons systems. The combination of quantum technologies and AI also needs particular attention. Quantum computing could enhance the performance of AI, exacerbating its existing ethical risks, such as bias, lack of transparency and problems with the attribution of responsibility. At the same time, AI can help to detect patterns in data collected through quantum sensors, increasing the risks of privacy breaches and mass surveillance.

Implementing this principle means considering ways to limit the access of authoritarian governments to quantum technologies. This could be in line with existing regulations for the export of technologies used for surveillance, such as the EU’s Dual-Use Regulation Recast.

Ensure securitization is justified and balanced

The development of defence and security technologies takes place in a geopolitically competitive environment in which states, particularly adversarial ones, try to outcompete each other for strategic advantage. There can be benefits to global competition if it drives innovation. But as quantum technologies are increasingly ‘securitized’ (identified as a national-security priority), states might limit access to relevant research and technologies.

Where possible, such measures must be balanced with, and must not undercut, the potential global benefits of quantum technologies. This means recognizing that, although the securitization of certain technologies is in some cases a regrettable but necessary response to geopolitical dynamics, it is not always necessary.

Policymakers should be mindful of the possible negative consequences of a securitization approach to quantum tech, learning from the securitization of AI. For example, the development of AI technologies has played out as a race. As AI has matured, race dynamics and the isolationist and protectionist policies adopted by countries such as the United States and China have proved detrimental to AI’s development, to its adoption and to the mitigation of its risks.

Particularly in defence, it has become clear that leveraging the full potential of AI requires sharing capabilities: fostering interoperability, shared standards and testing systems within alliances, for example. As a result, in 2022, NATO established the Data and Artificial Intelligence Review Board to develop for its allies “a common baseline to help create quality control, mitigate risks and adopt trustworthy and interoperable AI systems”. Similar connections will be needed should quantum technologies become securitized, as seems likely.

Build in multilateral collaboration and oversight

Defence organizations must continue to work with, in and through international forums and organizations to establish multilateral regulatory frameworks and guidelines to govern quantum technologies. Because the harmful effects that could arise from such technologies will cross borders, states should not govern them in isolation.

We propose setting up an independent oversight body for quantum technologies in the defence domain, similar to the International Atomic Energy Agency. As with AI, such measures must be taken well before the widespread adoption of quantum technologies.

Put information security at the centre

Defence organizations should seek to reduce the risks of information leaks surrounding sensitive quantum technologies. This means emphasizing information security throughout the quantum-technology life cycle. This must be done before the technologies mature, to mitigate the risks of cyberattacks that aim to ‘harvest now, decrypt later’, that is, to collect encrypted data today and decrypt it once sufficiently powerful quantum computers exist.

Promote development strategies for societal benefit

As with nuclear power and AI, quantum technologies are dual-use. The defence establishment should develop strategies to support civilian applications of quantum technologies that address global challenges in areas such as health care, agriculture and climate change.

This is another lesson from AI. China’s successful harnessing of AI has been driven partly by a ‘fusion’ development strategy, in which cooperation between civilian research organizations and defence enables the pooling of resources10. At the same time, collaboration with civil society can help to demystify quantum tech, fostering trust between the public and national defence and security organizations as innovation progresses.

The anticipatory ethical governance we propose here demands investments of time, funding and human resources. These are key to steering the quantum transformation of defence in line with societal values. Ignoring the need for ethical governance now to sidestep these costs is a path to failure: addressing harms, correcting mistakes and reclaiming missed opportunities later on will be much more costly.